Reliable Rationality Routines

Investigating improvements to the interface design of applied rationality

Joar Granström / April 2022

–

Version 0.1 (early access)

People interested in moderately in-depth research on ways to improve dynamic media design that help people grow and maintain effectiveness at rationality (as defined on LessWrong).

This interest seems to strongly correlate with, but is not limited to, an interest in the Effective Altruism movement.

The article is also for people who have tried the Instrumentally prototype and are curious about more nuance on the reasoning behind its design and its vision for future development.

Don't identify as the assumed audience? Explore alternatives or try exploring the short invitation below ⬇

Invitation

"How we spend our days is, of course, how we spend our lives."

"A tool converts what we can do into what we want to do."


It is hard to effectively choose and maintain your knack stack (by analogy with choosing a tech stack in software development, but for choosing which methods and beliefs you let pay rent on the limited space of attention in your mental processes).

Even if we use the best available Tools for Thought, we might not be using the best available methods with them, even if we've read about those methods at some point.

We could help people who aspire to improve the world effectively to create more value, by improving the infrastructure and instruments that reinforce them to more effectively explore, practice / maintain, apply and share their reliable rationality routines.

No matter their current level of skill, all people need reinforcement to be able to grow and maintain a high level of rationality expertise.

Improved system designs in the form of infrastructure and instruments could further enhance human capability by making growth and maintenance of expertise more reliable.

This design project's focus is to scout for design that could increase the number, the reliability and the effectiveness of aspiring effective altruists (or adjacent), striving individually and collaboratively, who are deliberately trying to improve the world for the long-term future of life.

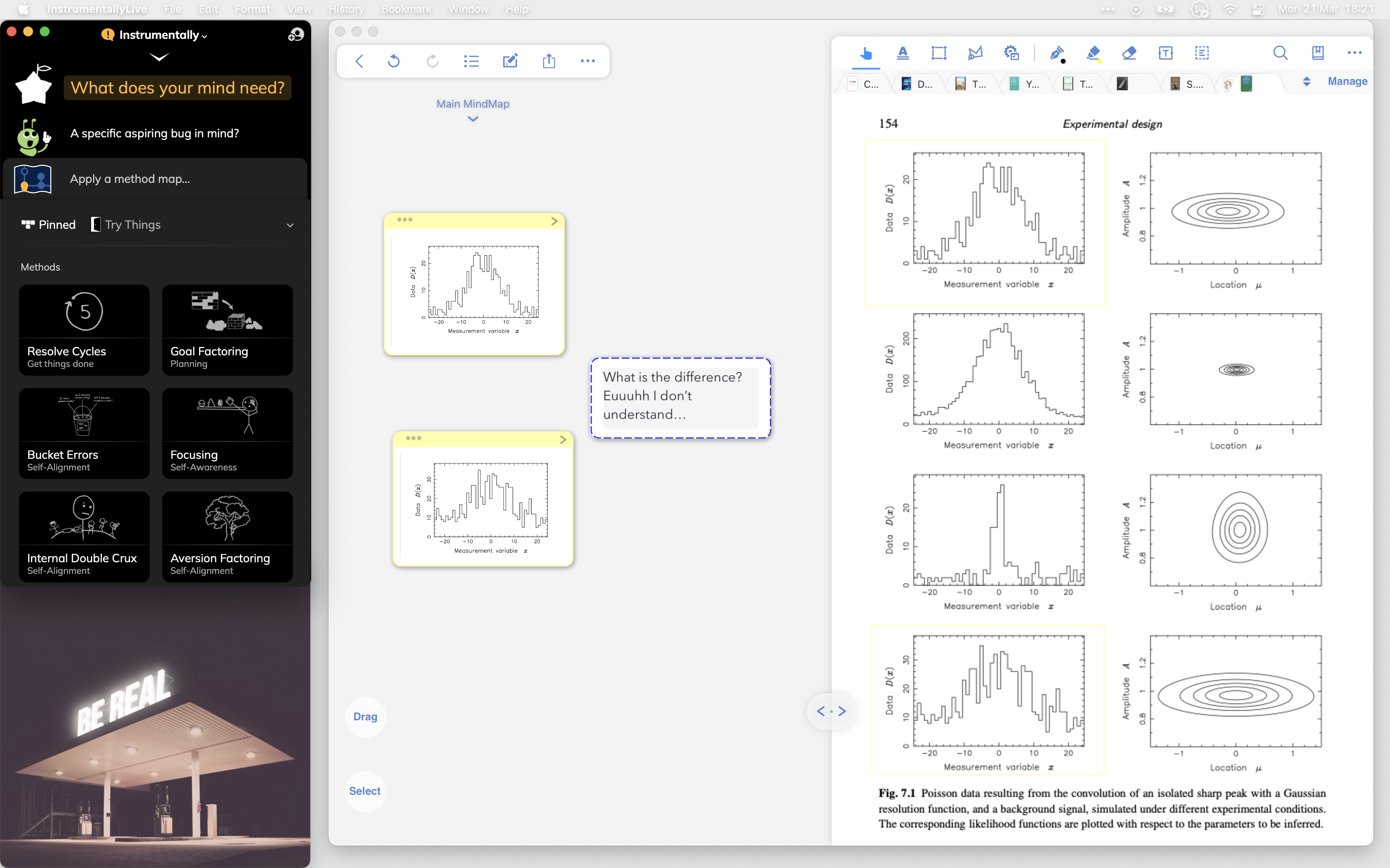

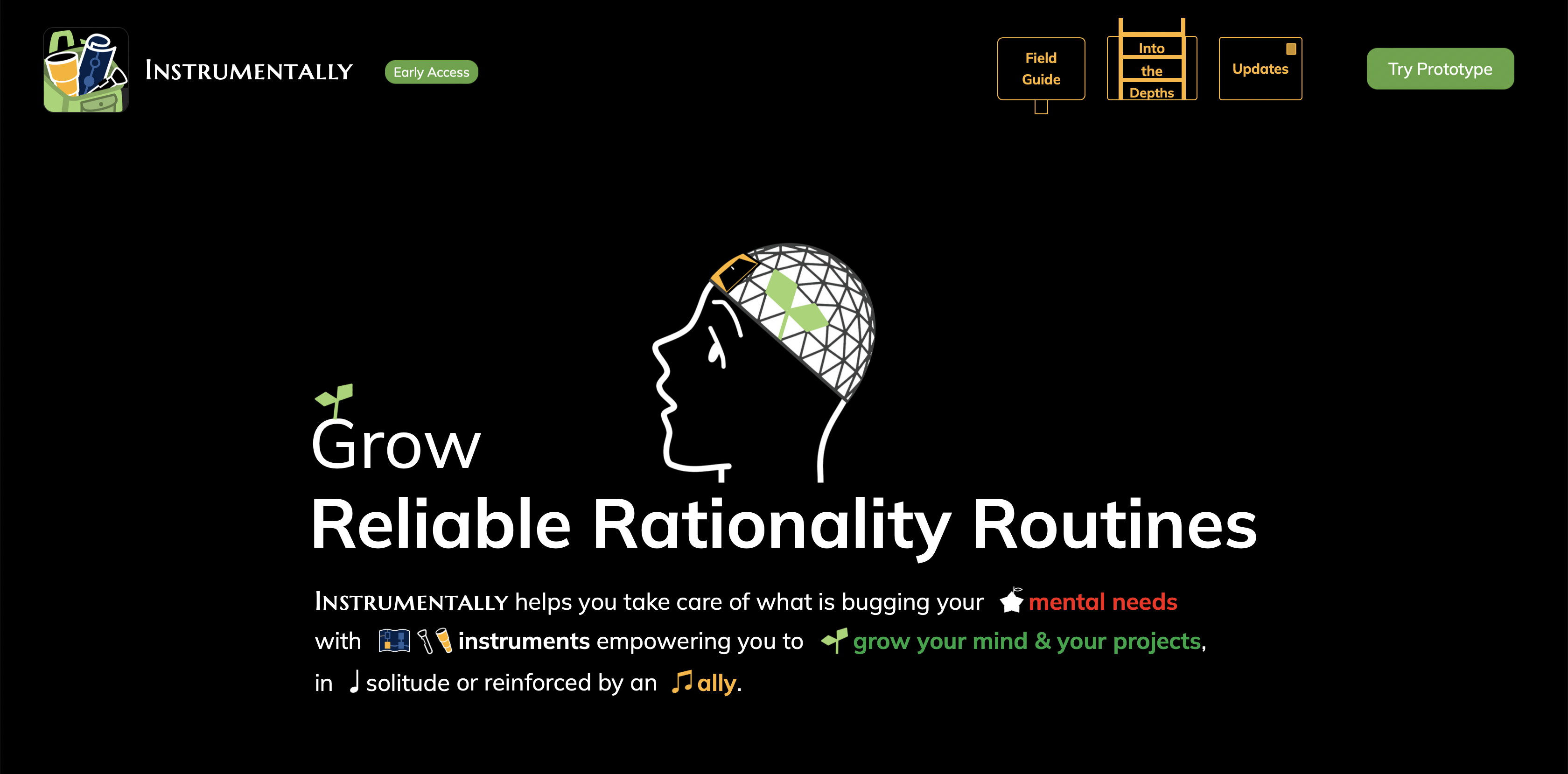

As early steps toward a larger vision of reliable rationality routines, the prototype called Instrumentally has made some development progress.

Instrumentally helps you use rationality informed methods to model and problem solve what is bugging your mental needs, during work and life projects.

People often mention the concept of a minimum viable product, but for long-term research launching a system as soon as possible isn't always the best approach; premature scaling can stunt system iteration.

Instrumentally might be developed for production later with potentially large revisions, but so far the prototype work has prioritized scouting for potential virtues of reliable rationality routine interfaces that are feasible in the short-term but can grow for the long-term.

Contents

In this invitation, an initial simplified model of what this work is about was presented as well as this map of the article's contents.


In the introduction, reliable rationality routines will be defined as well as some challenges of growing and maintaining such routines. Then some gaps in current designs and whether interface systems could help fill the actual-ideal gaps of reliable rationality routines are considered. Lastly, an initial preview of a prototype created to help explore the design space is presented, early steps on a larger plan of attempts to close the actual-ideal gaps of reliable rationality routines.

In the scope section, the motivations behind the project and its current level of thoroughness are made more transparent. Potentially important but not yet prioritized work is listed.

The Prototype Project section is a show & tell of the prototype Instrumentally, including both a higher fidelity prototype and low-fidelity design sketches of some possible future capabilities of the Instrumentally system.

In the Improvement Opportunities section virtues of reliable rationality routine interface systems are presented. A few key more abstract design principles important to the vision of Instrumentally are presented in more depth.

The Potential Utility chapter points to big picture values and cause areas (tied to Effective Altruism) that the vision of reliable rationality routines aspires to align to.

A few sub-factors to those causes are described, to clarify the chain of value down to the prototype.

The Summary is a reference of the article's key points, probably easier to understand deeply if you explore the article first.

Thanks to supporters and inspirations.

An invitation for you to join the cause, either by scouting the robustness of this project's goals and plans, by funding further research and/or by building better design.

Opening the Door

Scope

<––––––––––––––––––––––––––––––––––––––>

Concrete

Abstract

Summary and Conclusion

Acknowledgements

How you can contribute


Opening the Door

To introduce the project, we'll explore some potential goals of reliable rationality routines, obstacles to those goals, and plans of early steps to close the actual-ideal gaps.

Reliable Rationality Routines

The idea of reliable rationality routines captures a big part of the direction of this project. Here is what I mean by it:

Reliable ≈ Used & Effective

Used ≈ Agency or grit and work ethic, with help of systems designed for people, to more likely actually apply rationality informed methods at well-suited opportunities.

Effective ≈ Applying high expected value processes (the approximately best alternatives that communities like LessWrong, Effective Altruism, science and expert fieldworkers have explored so far).

This involves using well-designed instruments that extend human capability.

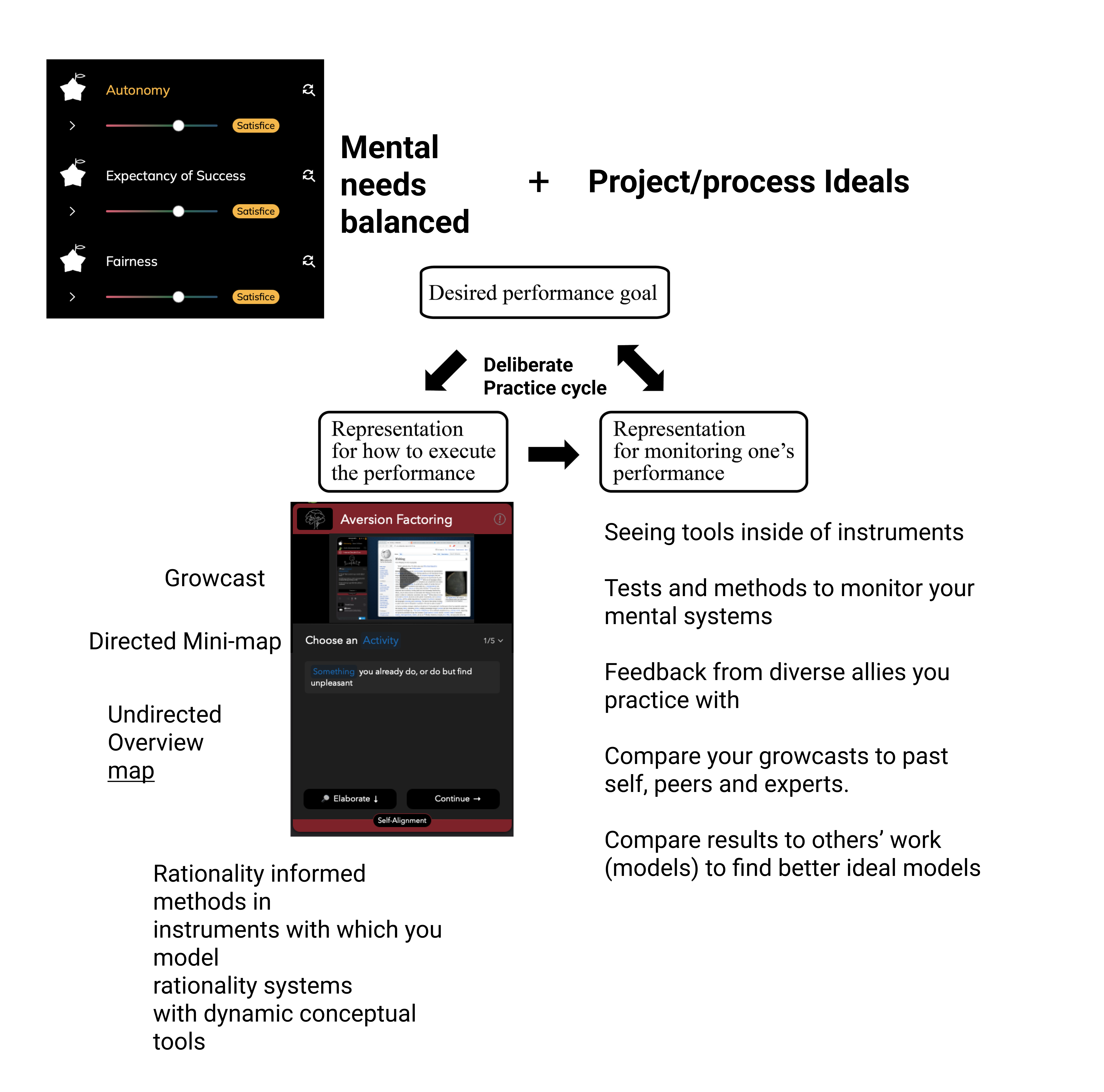

This also involves balancing psychological short-term needs to fuel reliable continued growth of long-term value for oneself and others.

Rationality = LessWrong definition of Rationality

It is common for people to have different definitions of the word rationality, as Julia Galef describes in her talk on Straw Vulcan Rationality.

Which definition of rationality is most common or right isn't the point; the point is that we're communicating about the same thing.

It's beyond the scope of this article to argue for the value of this definition of rationality.

If you're skeptical, try exploring the definition on LessWrong for just a minute. It enriched my life.

Rationality informed methods to gain a clearer understanding of the world and to improve prioritization in your decisions to actually create more value in the world (possibly including both personal value and altruistic value).

Routines ≈ Practice / Maintain and Perform during projects, sometimes in solitude, sometimes shared.

Practice ≈ Learn effectively through deliberate practice drills on specific skill gaps, with feedback.

Maintain ≈ Update yourself and your environments (habits, methods, instruments, collaborations, aspirations, systems, etc.) to continue to have an actual high level of rationality expertise.

Perform ≈ Apply skills in valuable projects (self-improvement & world-improvement) through deliberate performance.

During projects ≈ Take care of what is bugging your mind directly (turbocharge!), using methods through instruments at well-suited opportunities in-context of your work and life projects, not only during one-time events such as courses or workshops.

Shared ≈ Transparency and openness in showing the processes and systems one actually uses, as well as aspirations for more ideal systems.

Main purposes of this: (1) to receive support to cope with reality, (2) to receive feedback to effectively update and (3) to share less lossy process information for the community of aspiring world improvers to update their processes from.

"Those who work with the door shut"

...

"don't know what to work on, they're not connected with reality"

...

"I cannot prove to you whether the open door causes the open mind or the open mind causes the open door"

...

"The guys with their doors closed were often very well able, very gifted, but they seemed to work always on slightly the wrong problem".

Challenges of growing and maintaining reliable rationality routine expertise.

Long-time experienced practitioners of programming may be loss averse and therefore skeptical of newer initiatives to improve programming to be more accessible yet still powerful.

Similarly there is a risk for the aspiring rationality community to suffer from the curse of knowledge and forget how inefficient it was to learn to apply rationality when one was less experienced.

Rationality is so difficult that even after much time and effort of trying to learn I expect we all find our actual-ideal gaps bigger than we'd like, at least for some of the following challenges.

A sample of the challenges

• In knowledge work there exist many possible methods to use and many patterns (often hard to notice) for where the methods could be applied. Reliably noticing patterns and recalling methods is hard in all of the noise.

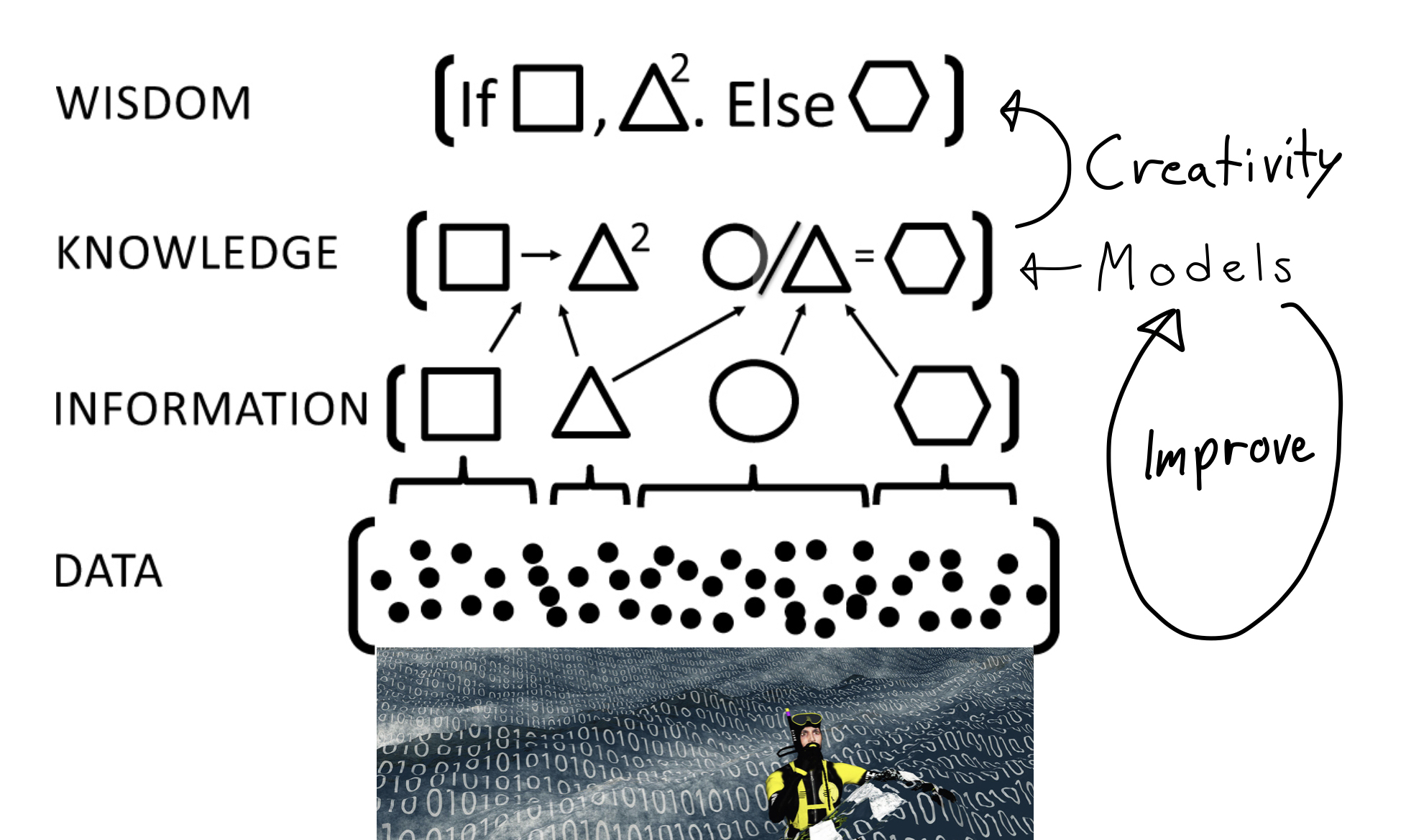

How models transform data into wisdom

The image comes from the book The Model Thinker.

I added the scribbles on the right. Improving models also includes creativity, but the most difficult creativity (not necessarily innovation) might be to understand how to combine knowledge to properly take action/design for different situations.

I also added the diver, for fun and to refer to the metaphor of swimming in a sea of uncertainty from the book Transcend.

• It is hard to effectively choose and maintain your knack stack (by analogy with choosing a tech stack in software development, but for choosing which methods and beliefs you let pay rent on the limited space of attention in your mental processes).

Even if we use the best available Tools for Thought, we might not be using the best available methods with them, even if we've read about those methods at some point.

Knackpack, as a more concrete alternative framing to knack stack.

Powerful tool

Ineffective method

• To effectively update our motivation to remove self-deception and work on projects of higher expected utility.

Reliable motivation calibrated to truth is an emergent property of well grown and maintained mental systems.

These systems are invisible and thus very hard to gain a clearer understanding of without spending a lot of time studying the cognitive science.

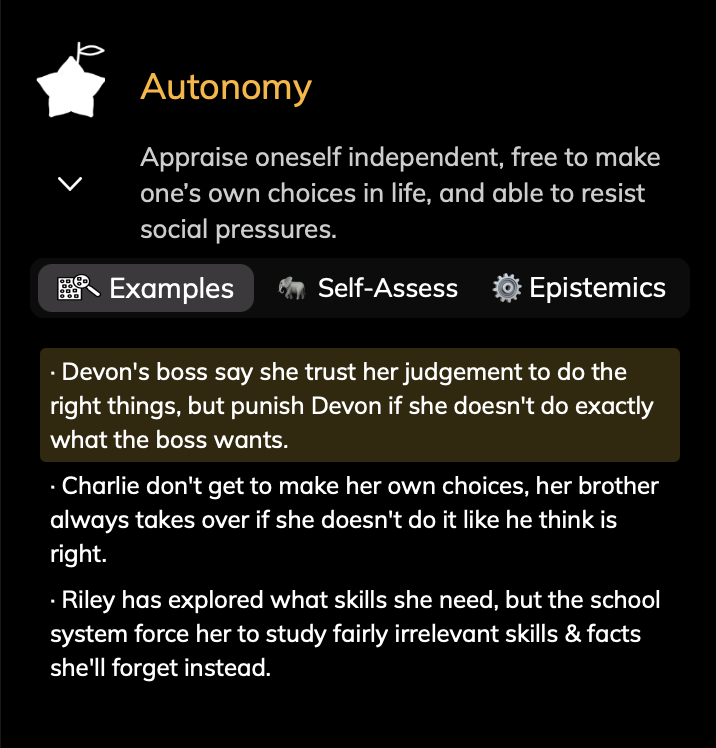

People are left on their own to figure out what their mental needs are and how to effectively fulfill them.

To help people more reliably have a truth/accuracy-seeking mindset (scout mindset) we need to provide design that helps them, in real time, model and understand how to balance mental resources, e.g. emotions of comfort and self-esteem and social needs of belonging.

• Gain fruits from, and contribute to making, a world in post-scarcity of effective rational compassion support.

To maintain and grow ourselves and the world we need both well designed challenge & well designed support.

Some top performers are really skilled at rational compassion support, but they have limited time. How do we create a scalable system where all aspiring effective altruists receive high-quality rational compassion support?

• To see the work process (not just work outputs) of Effective Altruism / rationality experts is hard, since that information is a scarce resource for the large part of the community that isn't in the loop.

Text and podcasts that only use natural language lossily compress the deep models of experts and often are not about process as much as describing topics.

Improving the world isn't a competition, yet there is little design helping us efficiently spend a sample of our time to share our wisdom in nearer-lossless formats so people can learn from each other's processes.

• Doing effective deliberate practice for knowledge work to keep our routines more reliably effective.

• People are by nature biased toward new information that is low effort to take in. Practicing to apply the applied rationality basics well is also important, but effortful and thus less likely to occur continuously without improved design.


Rationality verbs, some more reinforced by design than others

Inspiration for the concept of rationality verbs came from game designers who discuss which verbs players play with as they interact with a game design, e.g. "press the A-button to make Mario jump", "look at the mini-map to orient and decide where to go next" or "put a card from your hand onto the table".

Similarly for rationality, to show & tell an effective rationality process we need verbs, behaviors/procedures an aspiring rationality expert does well to be considered more rational.

The point isn't that the verbs should be exclusive to rationality, merely a help to understand what kinds of activities a rationality expert actually usually uses.

As will be discussed more later, it matters which method you use to do the verbs.

If better alternatives were to come along which fulfill the same goals / constraints, then we should adapt. Verbs are important, but particular verbs can be factored or updated.

Know of a better taxonomy/ontology, e.g. one where the verbs don't intersect as much or sit on a different, more useful level of abstraction? Your feedback is welcome, so we can improve.

Some rationality verbs are more salient in the community than others:

Forecasting / Predicting

Betting

Modeling

Exploring

Updating / Calibrating / Orienting

Aligning motivation to truth

Taking Action

Deciding / Prioritizing / Planning

Reasoning

Self-directed Behavior Change

Measuring

Reordering values/aspirations

Evaluating / Scouting / Testing / Reviewing

Expanding comfort-zones

Coping

Resting

Supporting / Reinforcing

Communicating / Explaining

Coordinating / Cooperating

Showing process

Serving / Helping

Resource Gathering / Maintenance / Spending

Creative problem solving

Designing / Systemizing

Engineering / Building / Making

Automating

Optimizing / Improving

Experimenting

Learning / Practicing / Doing Scholarship

Debiasing

Focusing

Observing / Noticing / Monitoring

Instrumenting (using tools)


In the last decade forecasting has been made more visible, both in the communication channels of communities of people trying to improve the world effectively (e.g. Effective Altruism, LessWrong) and through affordances in tools like Guesstimate.

I think there are more rationality verbs that could benefit from getting a similar treatment.

Design could be improved to help us balance our time spent on different verbs, to optimize (better approximate) what actions would be most useful to us on the margin.
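To make "on the margin" concrete, here is a minimal Python sketch of one way to think about balancing time across verbs. It is only an illustration under loud assumptions: the function name `allocate_hours`, the example weights, and the square-root diminishing-returns curve are all hypothetical and not taken from this project or from Instrumentally. Each additional hour goes to whichever verb currently offers the highest marginal value.

```python
import heapq
import math


def allocate_hours(base_values: dict[str, float], hours: int) -> dict[str, int]:
    """Greedily give each hour to the verb with the highest marginal value.

    Assumption (hypothetical): the value of spending h hours on a verb is
    base_value * sqrt(h), i.e. returns diminish as more time is spent.
    """

    def marginal(verb: str, spent: int) -> float:
        # Extra value gained from one more hour after `spent` hours.
        return base_values[verb] * (math.sqrt(spent + 1) - math.sqrt(spent))

    allocation = {verb: 0 for verb in base_values}
    # heapq is a min-heap, so store negated marginal values to pop the largest.
    heap = [(-marginal(verb, 0), verb) for verb in base_values]
    heapq.heapify(heap)

    for _ in range(hours):
        _, verb = heapq.heappop(heap)
        allocation[verb] += 1
        heapq.heappush(heap, (-marginal(verb, allocation[verb]), verb))
    return allocation


# Hypothetical weights for three of the verbs listed above.
weights = {"Forecasting": 3.0, "Modeling": 2.0, "Resting": 1.0}
plan = allocate_hours(weights, hours=10)
print(plan)
```

The point of the sketch is only that even a low-weight verb receives some time once the marginal value of the others has dropped far enough; real weights and value curves would have to come from the person using the system.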

Interfaces as reliable rationality routine reinforcements

"The structure of things-humans-want does not always match the structure of the real world, or the structure of how-other-humans-see-the-world. When structures don’t match, someone or something needs to serve as an

interface

, translating between the two."

Nielsen and Carter conclude that interfaces are important because "interface design means developing the fundamental primitives human beings think and create with", not just about "making things pretty or easy-to-use".

Good interfaces empower people to do behaviors in processes that enhance the value they can create.

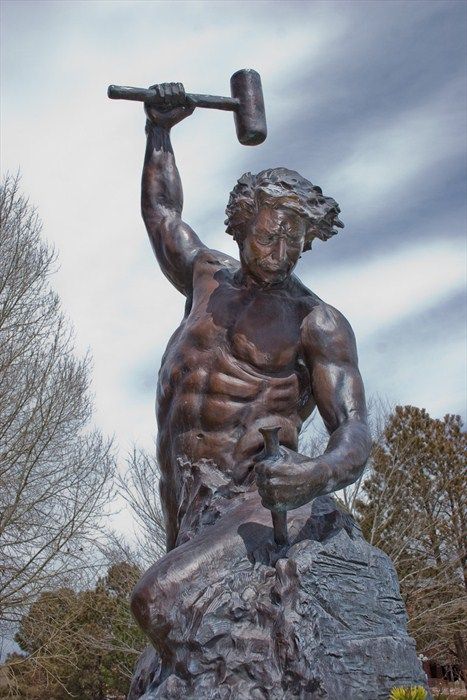

Self Made Person

LessWrong Source

A metaphor that many in the LessWrong community associate with rationality.

I added the comic bubbles.

Instruments for improving our capability to serve the world.

"A tool converts what we can do into what we want to do."

This project has scouted the design space of reliable rationality routines in search of important design properties that could improve our instruments and the infrastructure around them.

Let's explore some aspects of the current design that could be improved.

Ideal

Gap and improvement opportunity bugging us

Actual

A few limits of the status quo interfaces

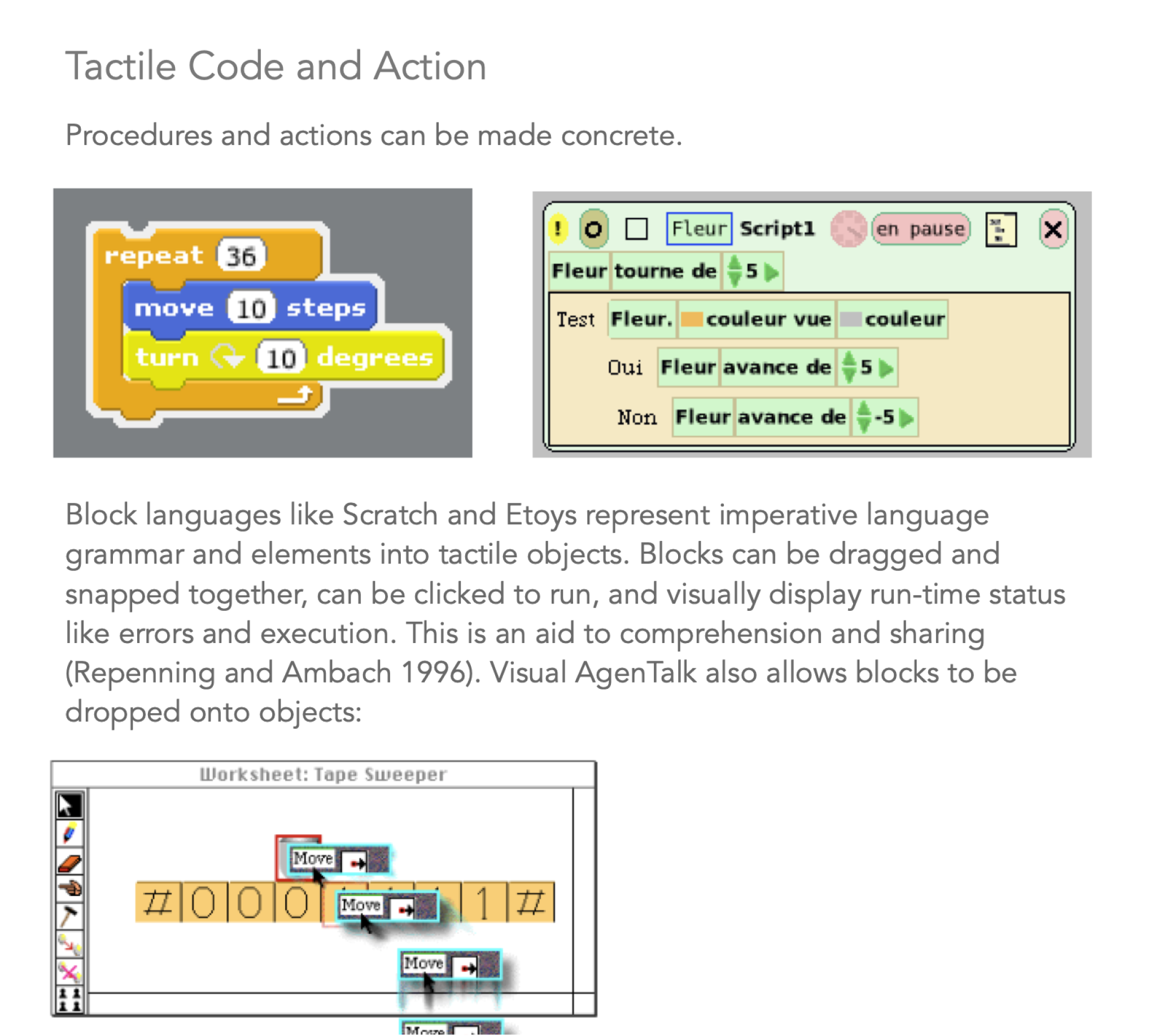

One reason that knowledge of rationality verb systems is hard to share in a way that gets more people to actually use them is that we still mostly communicate with textual representations of knowledge, even though we have powerful computers capable of dynamic media, as Bret Victor effectively explains.

"The wrong way to understand a system is to talk about it, to describe it, the right way to understand it is to get in there, model it and explore it, you can't do that in words. So what we have is that people are using these very old tools, people are explaining and convincing through reasoning and rhetoric, instead of the newer tools of evidence and explorable models."

Ozzie Gooen has also written about the limits of modern communication.

It is understandable that natural language remains the status quo: people spend a lot of time learning to read and write, and natural language is expressive.

Learning to author in new formats takes time and effort, so it's easier to use textual/symbolic representations of knowledge as a lowest common denominator format.

Unfortunately a one-size-fits-all approach often results in far from optimal effectiveness for many rationality verbs.

Combining text with dynamic media opens a door to new capabilities.

Even with truly active reading, information isn't enough for behavior change and effective learning. When the prominent environment design we present to people is texts, they probably mostly read texts, not necessarily practice the methods of the texts reliably.

The people who do practice are very motivated and are OK with spending a lot of time and effort reinventing the wheel of how to design a good practice routine.

Motivation and grit for hard but useful skill building is good, but creating systems/methods/knowledge to make it easier is also important.

This provides a better starting point from which people can take the role of a scientist/scout and optimize, and then later share their improvements back to the pool of human knowledge.

To learn and maintain effective processes we need to understand how we could improve by getting feedback.

One way is to read a lot and then try to recall the knowledge in the right situation to test ourselves against those models.

This is hard, especially for novices.

Still, for novices and experts alike, to effectively grow and maintain our skills and our projects we need to see what we're doing.

Dynamic media to see, explore and deeply understand is not just empowering as training wheels, as Bret Victor explains.

If we want people to use knowledge to model and solve problems, we need to make design that shows them how to do effective modeling, not just reading or blindly following low-dynamic templates or recipes that limit expressiveness and freedom too much.

The scientific paper template joke

Modeling tools aren't only for sporadic activities like modeling scientific paper communication; they can be for day-to-day decisions too. Even if we don't usually need full freedom and power, it's important to be able to take the escape hatch when we need it.

A few similar framings of this are learning by modeling, insight-through-making, make to know, research through design, scholarshipping, understanding-through-building or "jump in and figure it out".

Many people could benefit from good shortcuts in the form of effective instruments and environments for modeling, understanding and creating.

The existence of good shortcut design would still be compatible with researchers continuing to push the boundaries with the vast power and freedom of mathematics, systems programming and natural language.

There have been attempts to create more software for procedural learning of rationality verbs, e.g. Clearer Thinking. Yet the design capabilities of the guided track authoring tool are still a small subset of the potential of the dynamic medium.

Clearer Thinking courses are structured like forms or wizards, which do have some strengths.

The format has served Clearer Thinking well in making a lot of mini-courses / method guides on useful topics, but we also need more powerful tools to further push the boundaries of capability empowerment, expressiveness for diversity, enjoyability and more interface virtues.

These opportunities for improvement will be discussed more below (optionally: jump directly there).

What could a dynamic medium look like?

Bret Victor, Media for Thinking the Unthinkable

There are a lot of additional limits on the status quo. For more depth, explore e.g. Bret Victor's work, Andy Matuschak's research on similar topics and Michael Nielsen's work.

A few early steps toward a vision of reliable rationality routine interfaces

As a way to explore this design space I've partly taken the approach of learning by modeling (alternatively framed as: insight-through-making, make to know, research through design, scholarshipping or "jump in and figure it out").

The prototype doesn't fulfill all the identified virtues of reliable rationality routines, but it has helped make my hypotheses clearer by providing a concrete, visible design against which to consider how those virtues could be fulfilled.

A later section will show early sketches of additional design ideas that could support additional virtues.

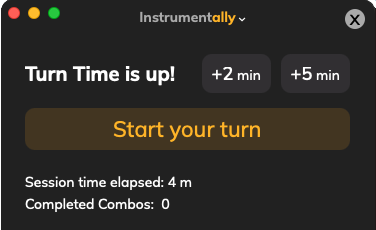

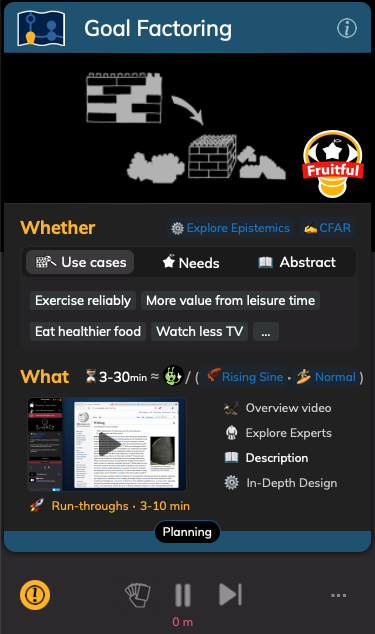

With Instrumentally you take care of what is bugging your mind, during work and life projects.

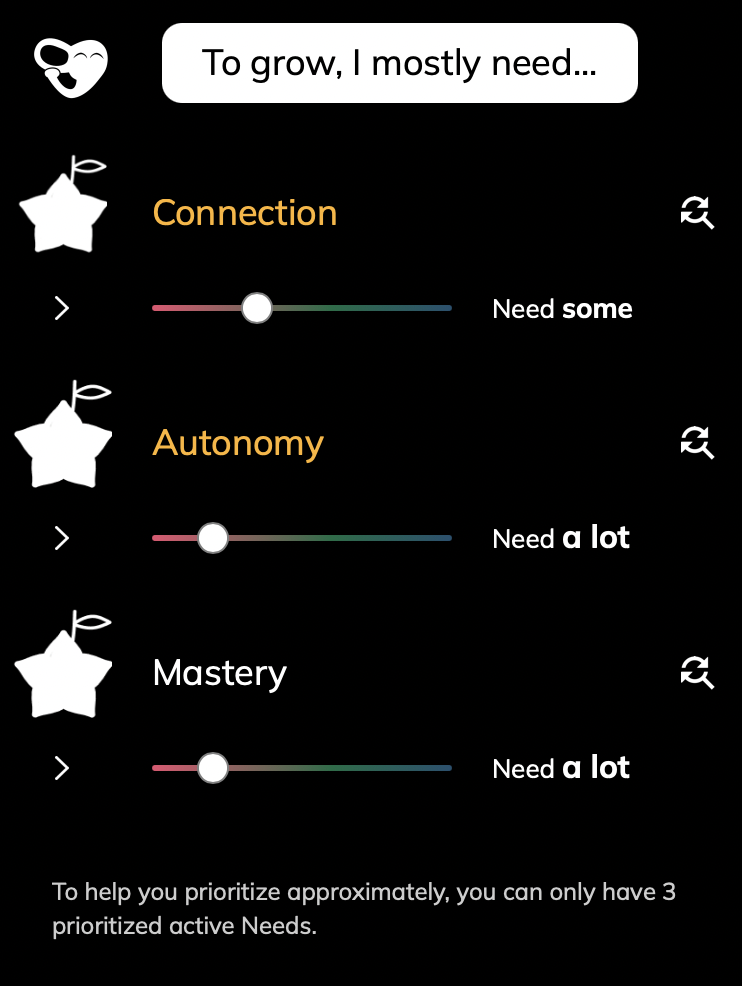

You build simple but useful models of the mental needs you currently have. Then you apply rationality informed methods to balance your mind toward more long-term reliable mental states.

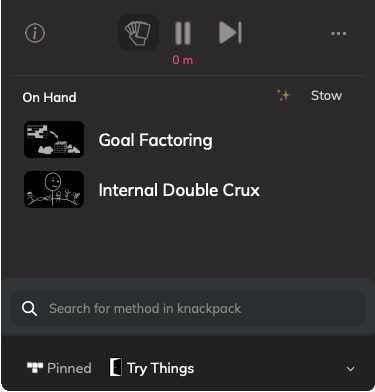

You launch rationality informed methods to inspire your moves and to launch powerful instruments to aid you as you explore and problem solve in your work and life projects.

To empower your problem solving you use multiple powerful tools on your computer together, instead of settling for a lowest common denominator monolith application.

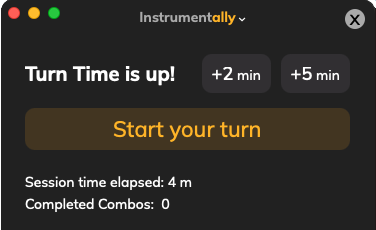

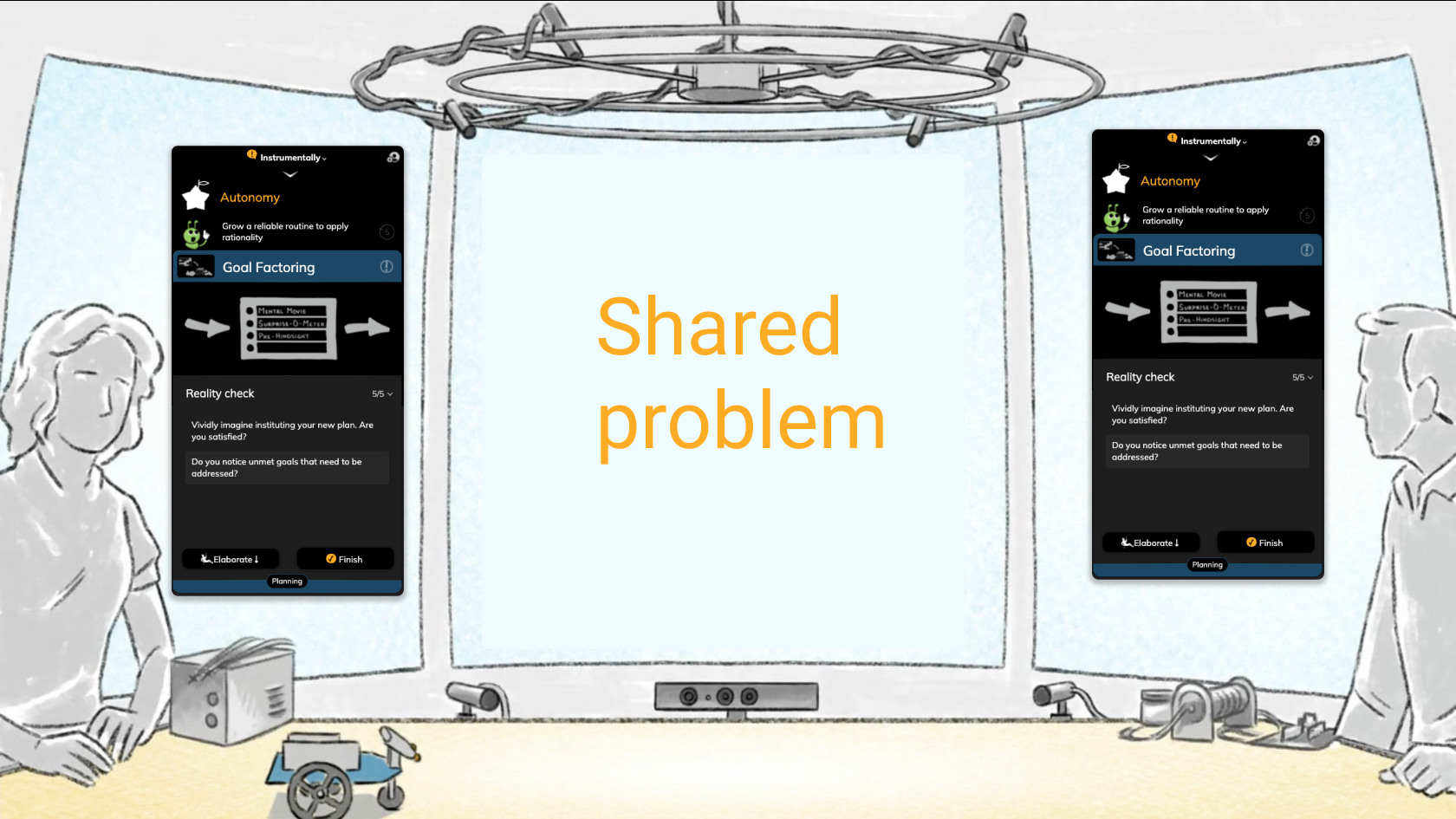

You invite allies and take turns to reinforce each other as you challenge yourselves to grow yourselves and the world for the long-term.

Your feedback is welcome, so we can improve:


Scope & Expectation Requests

In the spirit of the aspiring Effective Altruism community, which mostly values openness, transparency and honesty, I try to be clear about my intentions and uncertainties.

Expectation Requests


Motivation

I struggle to improve the reliability of my rationality routines, even though I'm very motivated to improve.

With compassion I see other aspiring Effective Altruists and LessWrong rationalists in my circles struggling with reliable rationality routines too. My circles are of course not necessarily representative of the whole community, but might be to some extent.

During my CFAR workshop attendance the idea of design was presented, how important it is to not rely on self-control. Yet little design to help us maintain reliable rationality routines is accessible for most people who are not in the loop.

So I want to figure out how to make more accessible systems to help more people improve their rationality routines, wherever they live, however far they've advanced their career and at their preferred pace.

Importantly, I view the improvement of reliable rationality routines as a community project. I'm just proposing some directions we might want to include to complement existing approaches.

I could write papers to explore and communicate theory on these problems.

This would have the benefit of being able to focus on approaches that could be better for the long-term.

Or I could design systems trying to improve the problems directly. These systems would probably be far from optimal, but they'd help people now and gather data of real-world use.

We need both approaches, and I wanted to do something in between with this project. Both providing value sooner and improving the design for the long-term.

If you notice any improvement opportunities of the article as you're exploring, I would truly love to know!

I've tried to take my responsibility of inviting feedback by reminding explorers throughout the article, like this:

Your feedback is welcome, so we can improve:

Rigor

I have done learning/research and iteration on the project as a side project for a couple of years and then full-time for about 6 months. Not all ideas discovered are included in this article, both for brevity and because it is difficult to know which ones would be most valuable to include for a more general audience.

Since I'm partly doing the project as skill building, quality is lacking on certain aspects that I'm practicing to improve on. I believe getting feedback from an incrementally increasing circle of disagreeable givers with scout mindset is better than polishing something too much before I've gotten reassurance from the community judgment that it is worth optimizing.

I'm aware I'm trying to tackle a hard question, and that my skills aren't enough to explore it as well as I'd like. Although I'm learning a lot, potentially somebody more skilled could distill, scout arguments, mentor me to think clearer about the cause or, at least partially, take up the baton.

Epistemic Status

Although I've been fairly time and resource constrained, I've based as many assumptions as possible in science (mainly cognitive science, rationality and interface design), fieldwork experts in interface design and game design as well as reasoning from good judgement people in the communities Effective Altruism and LessWrong. I will have misunderstood things, but am motivated to more thoroughly scout whether the approach is worth more attention or not.

Scope / Things I won't do or didn't do yet

• Explain all of the most important models I've used to support the design of the current prototype. I long for a future of systems in which you can learn about their design decisions, and the conceptual tools (theory) and evidence used to make a robust case for those decisions, in-context of the system itself. Design is a messy process and I aspire to be more organized and thorough in presenting all models I've used in the future. For now, please reach out if you're curious about a specific design decision or if you have any improvement opportunities bugging you! I've done my best to base decisions in interaction design, more solid cognitive science theory, rationality principles from LessWrong and CFAR, and other expert fieldworkers such as Jonathan Blow and the people on the Metamuse podcast.

• Test on many users. So far only acquaintances and a few interface professionals have evaluated the prototype and the vision of the project. Part of the motivation for this article is to get feedback and readjust direction.

• Ship/launch the Instrumentally app (so far it's only aspiring scholarshipping, not actually shipped).

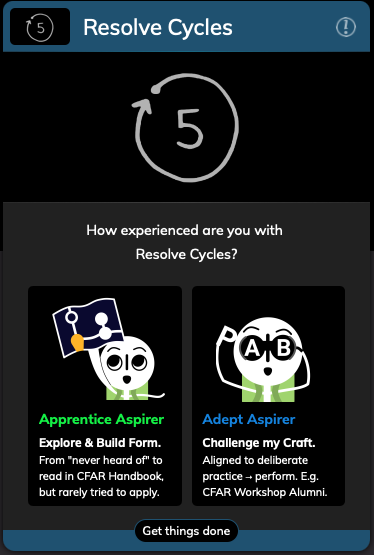

• Design for the general public. So far the prototype focuses on helping people who have been to a CFAR workshop or read the handbook thoroughly.


You're at a crossroads

The What Walk

––––––––––––––––––––––––––––––––––––––>

Concrete

Abstract

Start by exploring the concrete design, then move toward how this aspires to fulfill big picture values.

Choose if your expectancy of understanding abstractions is lower.

If not sure, choose this default path.

The Whether Walk

––––––––––––––––––––––––––––––––––––––>

Abstract

Concrete

Start by exploring the big picture value, then move toward more concrete ways to increase that value.

Both walks cover the same information, but in different order based on your selected need.


A Prototype Project

Early steps scouting for reliable rationality routine design improvements

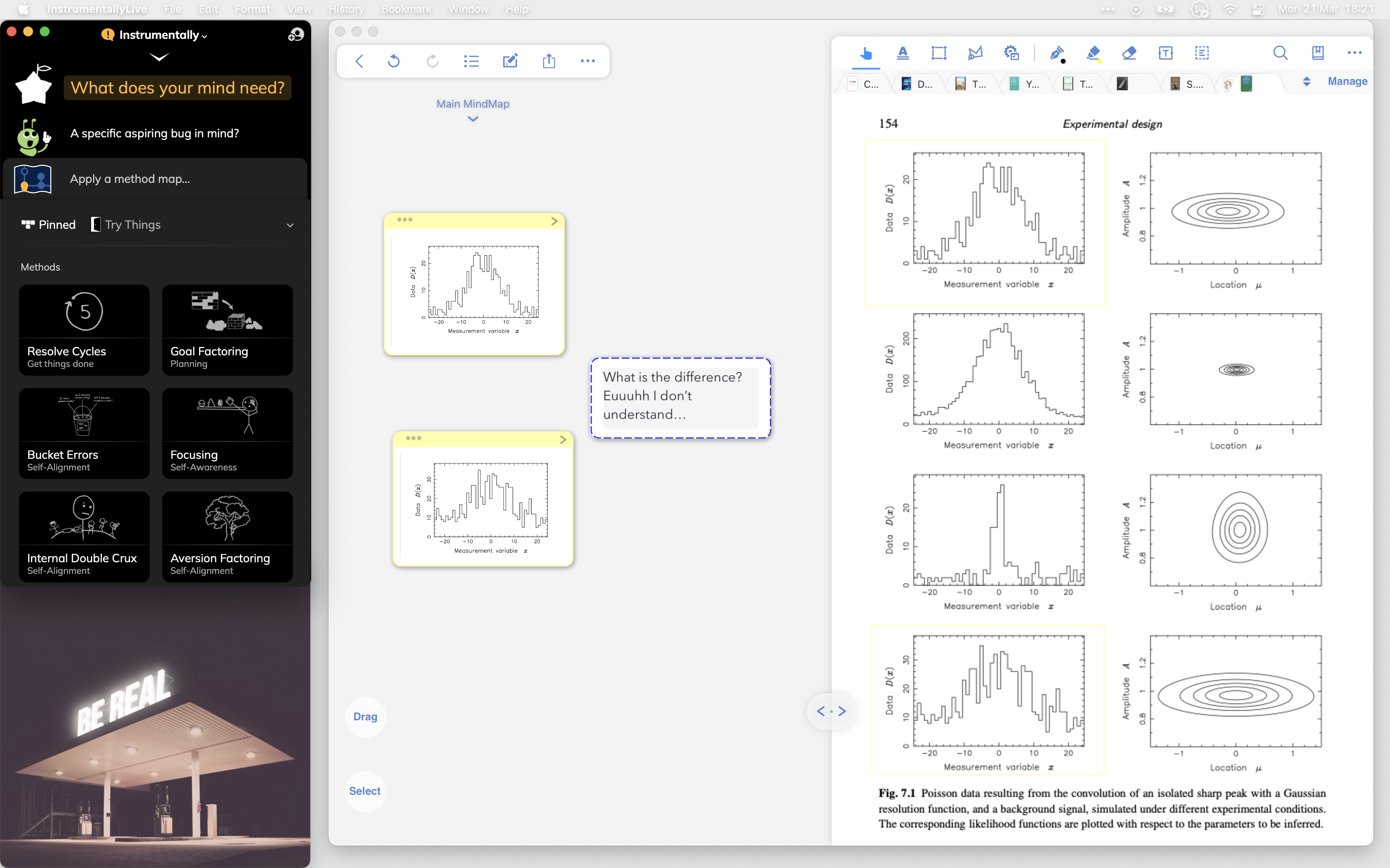

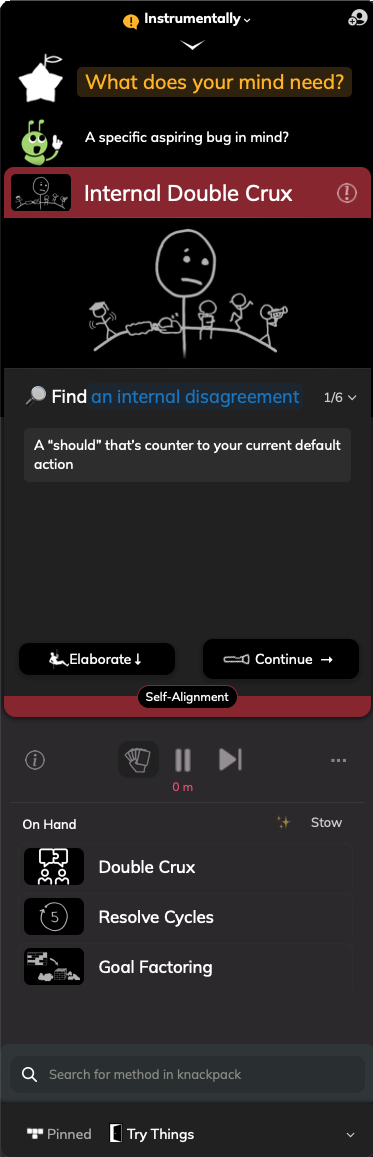

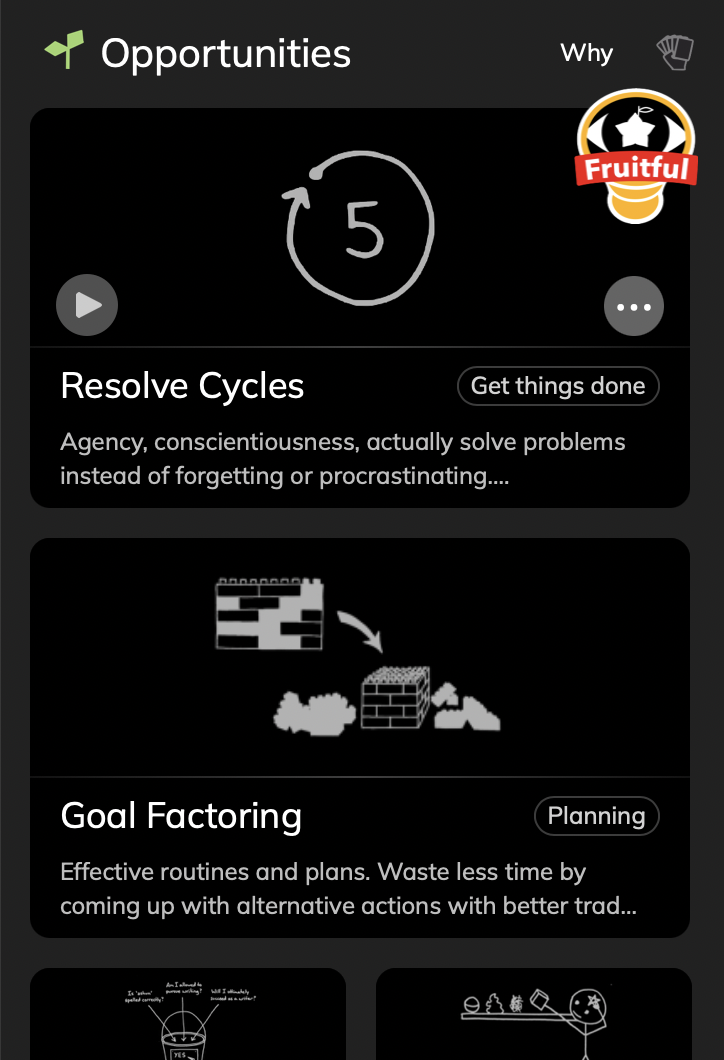

Instrumentally is currently a prototype software tool for desktop computers exploring ways to aid you to more reliably apply rationality methods while working with powerful tools on your computer.

The name Instrumentally is meant as in aspiring to act more instrumentally, referring to instrumental rationality. Instrumentally has two chunks, Instrument and ally, to indicate a focus on using software tools that enhance human capability and doing so together with an ally.

In case you're curious, here is a more thorough explanation of the reasoning behind the name.

What you use Instrumentally for

As your rationality sidebar Instrumentally stays close at hand to help you model and self-monitor cognitive processes as you grow reliable rationality routines.

Instrumentally stays visible in your working environment to remind you to attend to actually using the rationality informed methods you've discovered.

Try the Instrumentally Prototype

Your Rationality Sidebar

Invite an ally to reinforce your growth

Balance mental needs for long-term growth

Focus on small specific problems

Choose a method to work on the problem

Recall steps more easily with visuals.

Stay focused with a visual reminder.

Take action inspired by prompts on the method mini-map

(powerful method overview mode will be added later)

Launch your instruments via verb buttons

View method info and other meta actions

Switch between method hunches

Explore additional methods

"The wrong way to understand a system is to talk about it, to describe it, the right way to understand it is to get in there, model it and explore it, you can't do that in words. So what we have is that people are using these very old tools, people are explaining and convincing through reasoning and rhetoric, instead of the newer tools of evidence and explorable models."

While it is not required, I recommend that you explore the prototype (in another browser window) at the same time as you explore this section.

It might complement your understanding as you read the explanations here.

Optional character icons

This character refers to the book The Elephant in the Brain and is a reminder to be honest, with self-compassion, about your goals/metrics and motivations, and to update them toward truth and long-term value.

You can turn off characters like this in the application settings if they don't match your personal identities or your model of a productive environment.

Maybe you'll like them more if you read up on their connections to rationality by browsing the icons and their definitions.

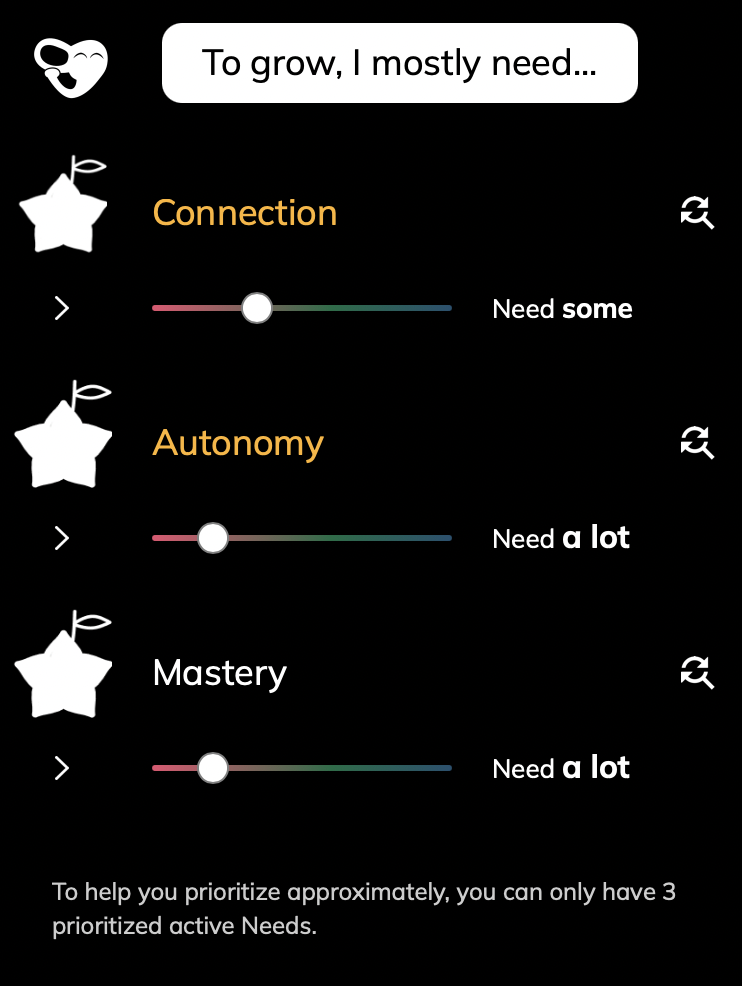

Visually explore dynamic evidence based models of mental needs, like browsing lego parts spread out on the floor.

Take care of your mental needs in a more delightful way, with aspiring rational self-compassion combined with a scout mindset.

Consider your priorities for long-term growth, by modeling your current top mental needs.

Balance marginal gains of mental needs to stay reliable.

Take care of specific improvement opportunities bugging you, either

during

work and life projects or in dedicated practice sessions.

Work on projects that are meaningful to You now, and update aspirations as you grow.

Receive aid in choosing good rationality informed methods. Train your tacit knowledge to pick the best fit among the top results.

Spend more time performing methods, less time searching for good methods.

Quickly launch rationality informed methods to help you take care of improvement opportunities that are bugging you.

Make better decisions by more reliably using better methods during your modeling and problem solving.

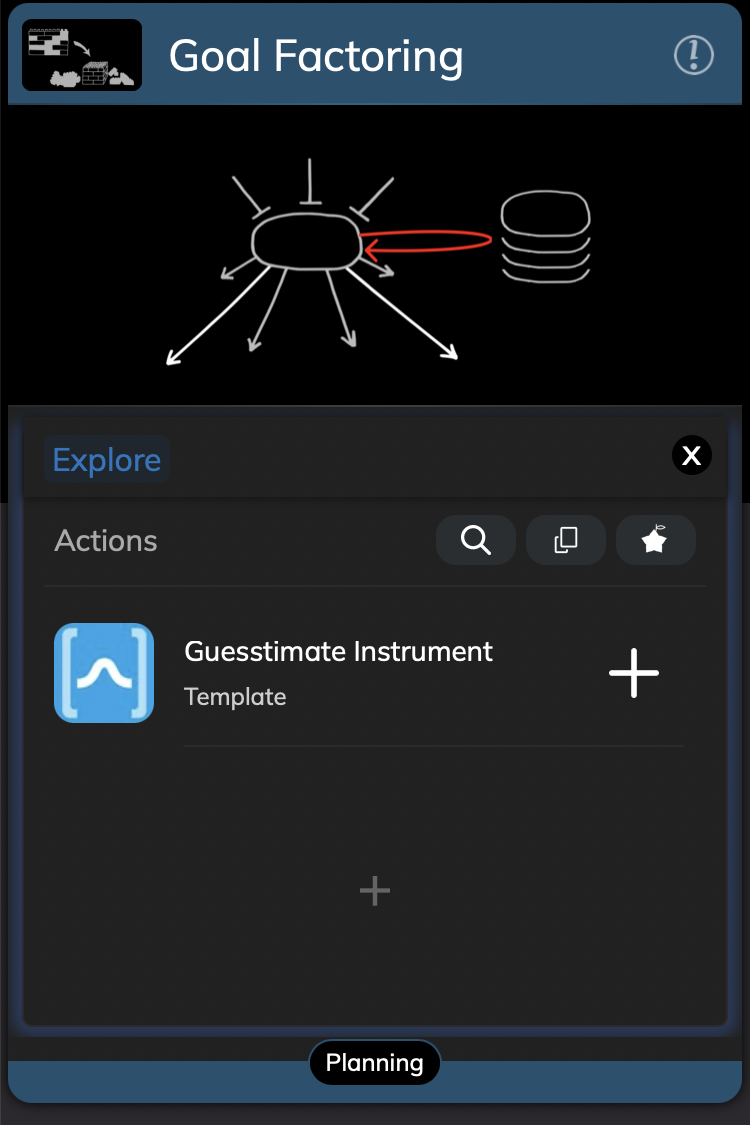

To explore/model and solve problems, use rationality informed methods together with the power of your whole computer.

Use rationality informed methods together with professional apps such as MarginNote, Muse and Guesstimate, and with programming, instead of restricting yourself to a

monolith application

with lowest-common-denominator functionality.

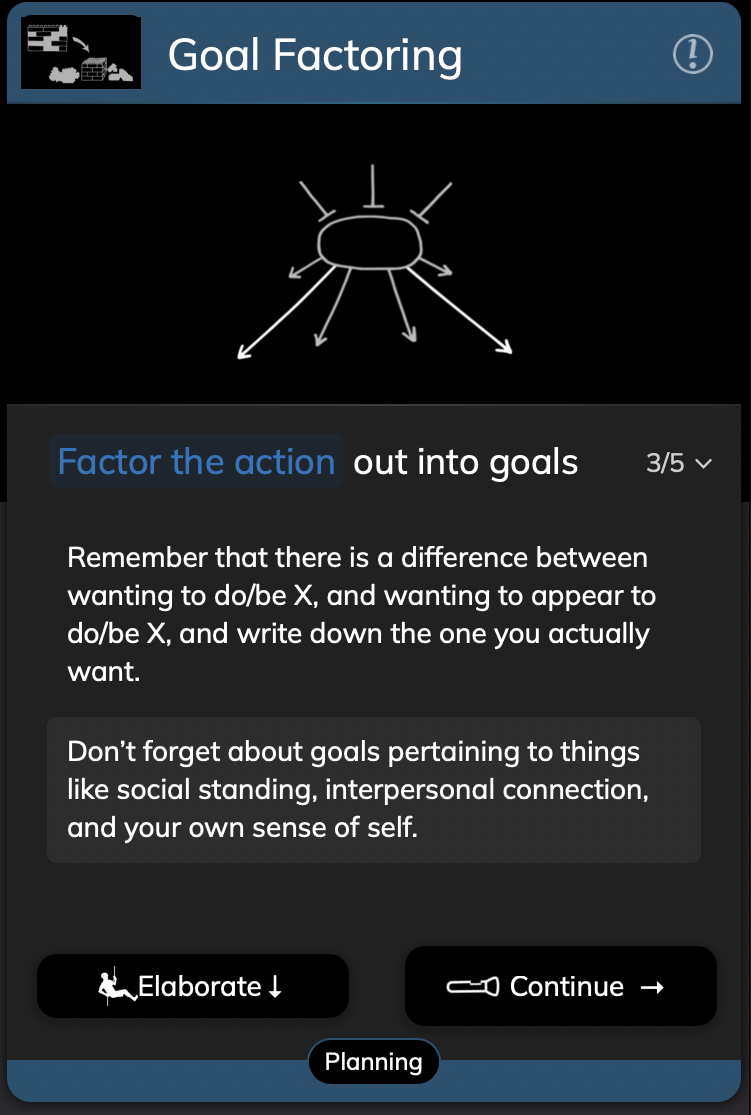

The method mini-map shows prompts in smart text, including rationality verb affordances, to aid your navigation in your knowledge work explorations.

Importantly, problem solving isn't powerful enough if you

follow linear recipes

.

Consider the mini-map/debugger a first step. You also need to be able to open a more powerful view of the method map for an overview in which you can use method modules to flexibly compose your course on the go (kind of like a

roguelike game

) as you are orienting your process and solving problems.

Method Maps are just one type of instrument in your knackpack.

From methods you can access action palettes and quickly launch powerful instruments on your computer which are effective for specific rationality verbs, like modeling and problem solving. You can even launch instruments at different template starting points and then adjust your models from there with flexibility and power.

Practice and perform at your pace.

Seek the sweet spot of challenge for deliberate practice to learn effectively

, but also spend time in flow to keep your motivation to make efforts reliable for the long-haul.

In-context, receive help in understanding whether to and how to use a method, by rich media examples.

Taking procedural action is the focus activity. Exploring the reason behind a method is also important, but it doesn't have to be a part of the method each time you use it

(tutorials are no fun).

Effective modeling and creative problem solving isn't a linear process. Flexibly switch between methods, compose your course as you go.

Invite allies to practice and solve problems with you, for support and empowerment. Take turns!

Reflect to gain insight on how to improve your process. Perhaps analyze a screen recording to aid your memory. Perhaps even together with a supporting ally for a bigger collective pool of attention.

Celebrate many tiny healthy steps of improving your process.

Gain agency / grit to use for growing yourself and the world long-term.

For more details check out the

Field Guide

Expectation Requests

I consider the progress so far to be among the early steps in the research for better applied rationality interfaces,

something a little more concrete which we can explore further and build on.

I do not claim to have found the right ways of doing applied rationality interfaces yet, but through actually trying to improve the design I've found a few possible directions.

This is a starting point. I've already discovered many aspects of the prototype that could be improved, but at some point you have to stop and show what you have found so far.

People often mention the concept of a minimum viable product, but for long-term research launching a system as soon as possible isn't always the best approach;

premature scaling can stunt system iteration

.

Instrumentally might be developed for production later with potentially large revisions, but so far the prototype work has prioritized scouting for potential virtues of reliable rationality routine interfaces that are feasible in the short-term but can grow for the long-term.

Instrumentally is a research prototype aspiring to be a scholarshipping project.

Instead of describing design in words, I try to communicate it in a less compressed way by using example prototypes to express principles.

The prototype doesn't fulfill all principles yet; good design takes time and a community of improvers.

Scholarshipping

is to ground a design in scholarly knowledge, while also testing it in reality by shipping it for people to use and send feedback on.

Other similar framings include:

learning by modeling

,

insight-through-making

,

make to know

,

research through design

and

"jump in and figure it out"

.

The project doesn't take credit for the specific example methods and their images which are included in the prototype. In the prototype they're explicitly credited to their creators, mostly the Center for Applied Rationality (CFAR). The focus of this design research is on the format and environment in which you do the rationality informed methods, not on creating new method content.

Many core design decisions were heavily inspired by the principles taught at the CFAR workshop and in the CFAR handbook, such as systematization, OODA loops, "let you wants come alive", and a lot more.

I do not claim to have succeeded in creating a design that fulfills the CFAR principles. I just want to contribute a little progress by making an example design to communicate with and to improve further.

If CFAR or somebody else could learn anything from the design when creating a better one, I've succeeded.

Outputs of the project so far

Sharing Scholarship

Website for the Instrumentally project

Interface field guide showing Instrumentally's capabilities

This research article you're exploring

Digital Garden, explorable open research

Iterations on low fidelity prototypes up to current design and beyond. (Described below)

Slyce (figma prototype)

Before I found

Muse

and before I decided I didn't want to make another

monolith application

, I made this prototype as an exploration of how to bring a system for mental methods/processes

in-context/inside of a professional modeling tool.

This might impact future development of Instrumentally even if much isn't included in the current prototype.

Aspiring

Shipping

Try the Instrumentally Prototype


Instrumentally Tunes

Why music?

As a former composer (or music modeler) I make music as a hobby sometimes, and I thought some people might appreciate the music (at least if it is more optimized) as part of my aspirations of making honest, truth-seeking yet enjoyable marketing for Instrumentally.

Enjoyability is a sub-factor of

accessibility, which is separate from robustness and importance

. Statistically enjoyability might correlate fairly strongly with low robustness and/or low importance, but enjoyability doesn't cause either low robustness or low importance.

Music as advertisement has been done successfully in e.g. popular game projects like

Super Mario Odyssey

,

League of Legends

and

Pokemon

Music can be used as

temptation bundling

by queueing just a few songs to help you get started working on your

ugh fields

.

This section is still early, need to write out fair

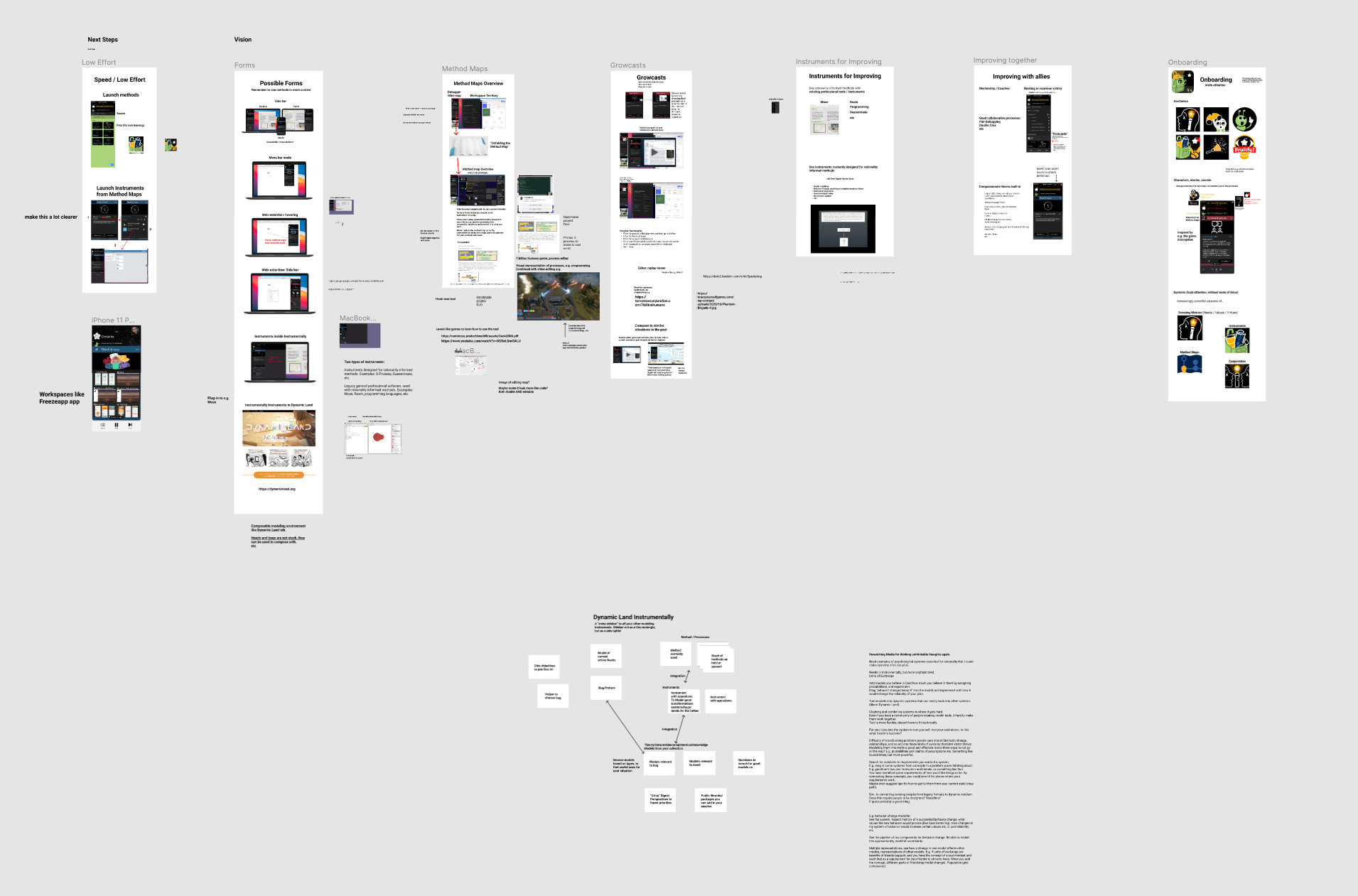

What Instrumentally could become

In addition to building the prototype, a big part of this project has been exploring and combining principles to build a vision of what a medium-term future of reliable rationality routines could look like.

Instrumentally aspires to continue to take a scholarshipping approach moving forward as well.

This includes doing research for design: research to find the most relevant theory and conceptual tools to make Instrumentally more reliable and robust.

It also involves shipping the software: creating a service that people actually use, both so that development can gain real world feedback and data, and to provide real world utility.

Initially the focus is on providing help to aspiring effective altruists who persist in strides trying to improve the world. But over time Instrumentally is open to optimizing for a more general public.

Steven Pinker recently said

that rationality, as he defines it, would benefit everyone, but that it's just not broadly seen as 'cool' yet.

Instrumentally might not be desirable to the most general public, but that is ok. The world has room for at least a few alternatives that optimize for different sections of the population. This is probably more effective than creating one lowest common denominator design for everyone anyway.

Potential future directions

Paraphrasing Bret Victor

: The features aren't the point, the point is the capabilities a design empowers users with.

Prioritizing the functionality of an environment is really important.

Many projects don't take their time to find a great version of a design, but rush to create news for users.

With Instrumentally we take a more long-term approach, aspiring for high quality design.

Premature scaling can stunt system iteration

.

Possibilities

Opening the Door

Scope

<––––––––––––––––––––––––––––––––––––––>

Concrete

Abstract

Summary and Conclusion

Acknowledgements

How you can contribute

Improvement Opportunities

This section is still early, need to write out fair

There are a lot of possible paths of research toward improving instruments and infrastructure for advancing applied rationality.

This is not a high-level overview of those, for more on that see e.g.

Advancing Wisdom / Intelligence by Ozzie Gooen

.

While artificial general intelligence (AGI) and brain-computer interfaces (BCI) are exciting areas too

, those areas are not in scope of this article.

Nielsen and Carter conclude

that AI and cognitive technologies can form a virtuous feedback cycle, improving each other.

Here the focus is on interface design that empowers people to more reliably apply rationality informed methods.

In the opening section rationality verbs for exploring, maintaining/practicing, applying and sharing were introduced.

If aspiring learners could understand the models and processes of experts more effectively, they could more quickly gain that expertise for themselves.

The flow of process in knowledge work is to a large extent hidden. If we added capabilities of showing the process to tool interfaces for modeling systems relevant to expertise, then we might empower more people to do better and more valuable work.

Some Virtues of Reliably Applied Rationality Interfaces

Capability enhancement / Empowerment

.

Enhancing capability for people to do what they want to be able to do.

Lower cost of useful behaviors, true better tradeoff.

Can be simple at first, but open for full power when you need it (without having to completely switch to different software, programming language, etc.).

Rational calibrated motivation isn't to put more energy toward useful but hard things, it's to make it easier to do useful things so they become more reliable.

Calibrate understanding of systems

Emergent behavior of a modeling system: Reader getting more out of the material than the designer put into it. Make things the tool maker couldn't think of.

Agency / Motivation without self-deception

.

Incentivize action, but allow the user to choose what actions best fit their priorities.

"Motivation without self-deception" refers to Julia Galef's book The Scout Mindset.

Encourage learners of all skill levels to grow. Win-win of learning, not competition in improving the world.

Enjoyable / Desirable (for good reasons) / Yummy

.

Behavioral design to incentivize actions that actually bring value.

Control of updates, update when best for you.

Familiarity is important for reliability. An environment that changes all the time can disrupt good habits.

There is a fine balance between updating to stay effective and not forcing the creation of new habits, perhaps at a time that isn't a good fit for the user.

Flexibility / Diversity

/ Autonomy /

Customizable, so you can edit to your preferences, which may change over time ("Adjusting your seat", CFAR).

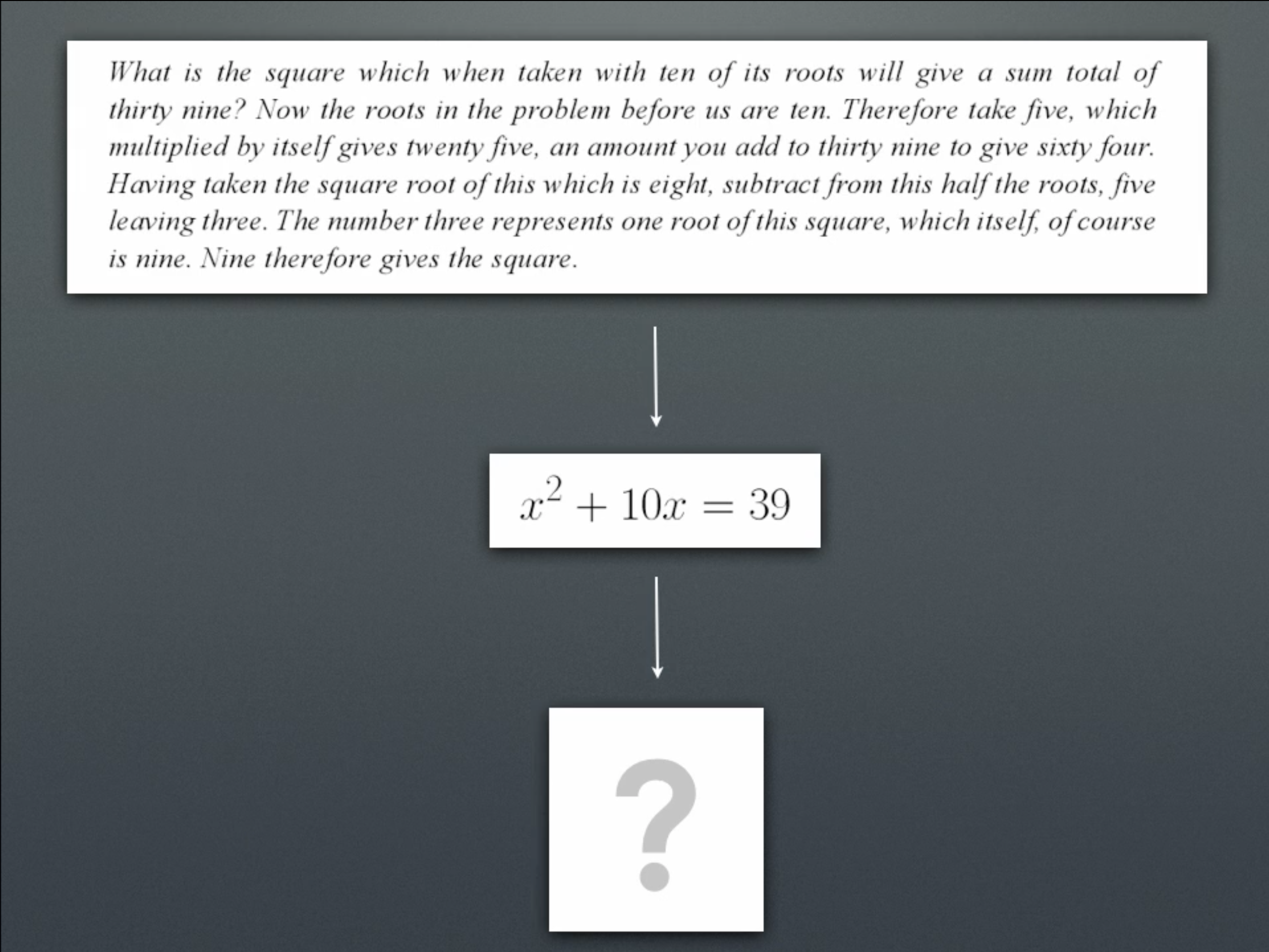

Start modeling from different starting points depending on which kind of information you have, e.g. like math problems.

Set your own constraints, more options isn't always good either.

Eventually End-user programming. Dynamic Land.

Encourages you to set up goals that you're personally motivated by right now, and to incrementally update them into goals that motivate you without self-deception. E.g. integration with Beeminder, with tips on how to do it, which parameters to experiment with, etc.

Allowing for expert process creative problem solving.

Escape hatch if you need full freedom

. Experts that are exploring need to be able to express new ideas. Systems and instruments can sometimes limit this, at least in their growth path toward greater power.

However, you can design systems and instruments to allow experts to more easily escape their constraints when they need to, while still benefiting by the instruments when they don't need more freedom.

Personalization

,

adjust the parameters to your specifics instead of other-optimizing.

Themes.

Favourite methods, need models, etc.

Choose your client. E.g. email or greaterwrong.com vs lesswrong.com

Skip story if you're mostly there for the systems, like in games.

Efficiency

,

Speed matters, tools shouldn't slow you down.

Excise reduces the probability of doing the behavior.

Could assist brain, not limit it.

Good Citizen Software: integratable with other tools / clients, and exportable.

"Contextual Computing"

Dynamic Land operating system Realtalk.

Availability

,

Works on essential platforms (aspiring to do so eventually) so you have access to it wherever you are (although, similar to Muse, it's not good to try to make a common denominator design; different platforms are good at different things).

Low cost maintenance

. Things like spaced repetition inspired scheduling help of when to update skills, tools, methods, needs, etc.

Community recommended best practices, spend less time exploring for good enough alternatives.

Spaced Repetition-like scheduling help to prioritize the right skills to maintain, enhance etc

Longevity, data usable, not have to switch tools and lose data, etc.

Learnability

, not effortless but with aid to learn complex powerful skills.

Start with the concrete, examples. A community of role models creating models to learn from.

Support & Challenge. Psychological flexibility and dissent.

Developmental perspective.

You should be able to do something you care about. Update aspirations as you grow.

Optimize for intermediate/expert, not beginners. But can add extra to help beginners.

Scalable, post-scarcity

.

Possible to use by many people, while still keeping

direction of culture well-steered

.

Software allows for this, especially free software.

However, software won't solve all problems. In some ways this is similar to how dating apps have a hard time finding you a perfect partner: some richness of information might get lost compared to e.g. a workshop.

Local and online.

Diversity, multiple local groups and multiple online groups.

Dynamic land, local/social and dynamic software.

If you feel stuck in one, recall that you're allowed to take the escape hatch from that incompatibility thinking.

Reinforcements / Support

.

Cooperation / Coordination / Collaboration,

Not just being able to work together, design with theory of group psychology, work psychology, rationality of working in groups, forecasting crowds, and more in consideration.

Help

Openness / Sharing / Privacy balance

.

Spend a sample of your time to aid others in their aspirations for more reliable rationality routines.

Open/Explorable Design Robustness

, Quality Epistemics, e.g. community / market.

Scout mindset, not overselling or motivating people to use it for bad reasons.

Credible

: Trust the design (for good reasons)

Accessibility

Free Access, see RAIN framework Ozzie, Less Scarcity

Findable

: highest expected utility actions most visible etc.

Find by recognition, not only recall (search)

Your library for procedures.

Can be multiple competing apps providing a good library, but should be designed like the user uses one mostly, to simplify and help encourage the habit of actually using it.

Spotify/Apple Music a library for music.

Papers a library for scientific papers.

At some point quality becomes more important than quantity, in part because it is easier to reliably use and maintain a few good things.

Measurable

.

Let people make their own tests and metrics and experiment to learn.
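
The spaced-repetition-like scheduling help listed under low cost maintenance above could be sketched roughly as follows. This is a minimal illustration in Python, not how the Instrumentally prototype works: the `MethodReview` record, the interval-doubling rule and the one-day reset are all assumptions chosen for simplicity.

```python
from dataclasses import dataclass
from datetime import date, timedelta


@dataclass
class MethodReview:
    """Hypothetical record of when a method was last practiced."""
    name: str
    last_practiced: date
    interval_days: int = 1  # current gap between practice sessions


def record_practice(review: MethodReview, today: date, went_well: bool) -> MethodReview:
    """Double the interval after a good session, reset it to one day otherwise."""
    new_interval = review.interval_days * 2 if went_well else 1
    return MethodReview(review.name, today, new_interval)


def due_methods(reviews: list[MethodReview], today: date) -> list[str]:
    """Return the names of methods that are due for practice, most overdue first."""
    due = []
    for r in reviews:
        next_date = r.last_practiced + timedelta(days=r.interval_days)
        if next_date <= today:
            due.append((today - next_date, r.name))
    return [name for _overdue, name in sorted(due, reverse=True)]
```

A sidebar could call something like `due_methods` once a day and surface only the top one or two results, so that maintenance stays low cost instead of turning into another backlog.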

Explore more design principles and ideas in the Digital Garden Research

And more factors that I've either not explored yet, forgotten, or that don't seem important enough...

Do you have an ideal you want me to add to the list, or just a different perspective of ideals?

Your feedback is welcome, so we can improve:

To try to accurately evaluate the current infrastructure (Clearer Thinking, CFAR and similar) would be hard to do fairly since I don't have information on their exact goals and future plans.

I realize it would be easier to understand if I gave concrete examples of current infrastructure and concrete examples of how an improved design would fulfill more of the virtues above.

I do not wish to create actual-ideal gaps that would make people not want to use the current infrastructure. We need to satisfice to reliably apply rationality, but also to search for better design.

However I want to show a gap big enough for some people to care about creating better design.

Technology changes constraints

, John Wentworth explains.

Humans have bounded rationality

, but we could push our boundaries further with the help of well engineered systems.

It is daunting to try since the challenge is so large, but it would be a shame to let the perfect be the enemy of the good and not try.

Aspiring cutting-edge-capable people could build reliable rationality routine systems with dynamic visual interfaces composing with tactile conceptual tool

To have good judgment is considered a

top priority trait in the aspiring Effective Altruism community.

Consider human judgment as a system.

In his talk

"Seeing Spaces"

Bret Victor presents why seeing systems is important.

He talks about systems in general, but something not mentioned is the mental systems of the people working on modeling something, and the systems of their collaboration.

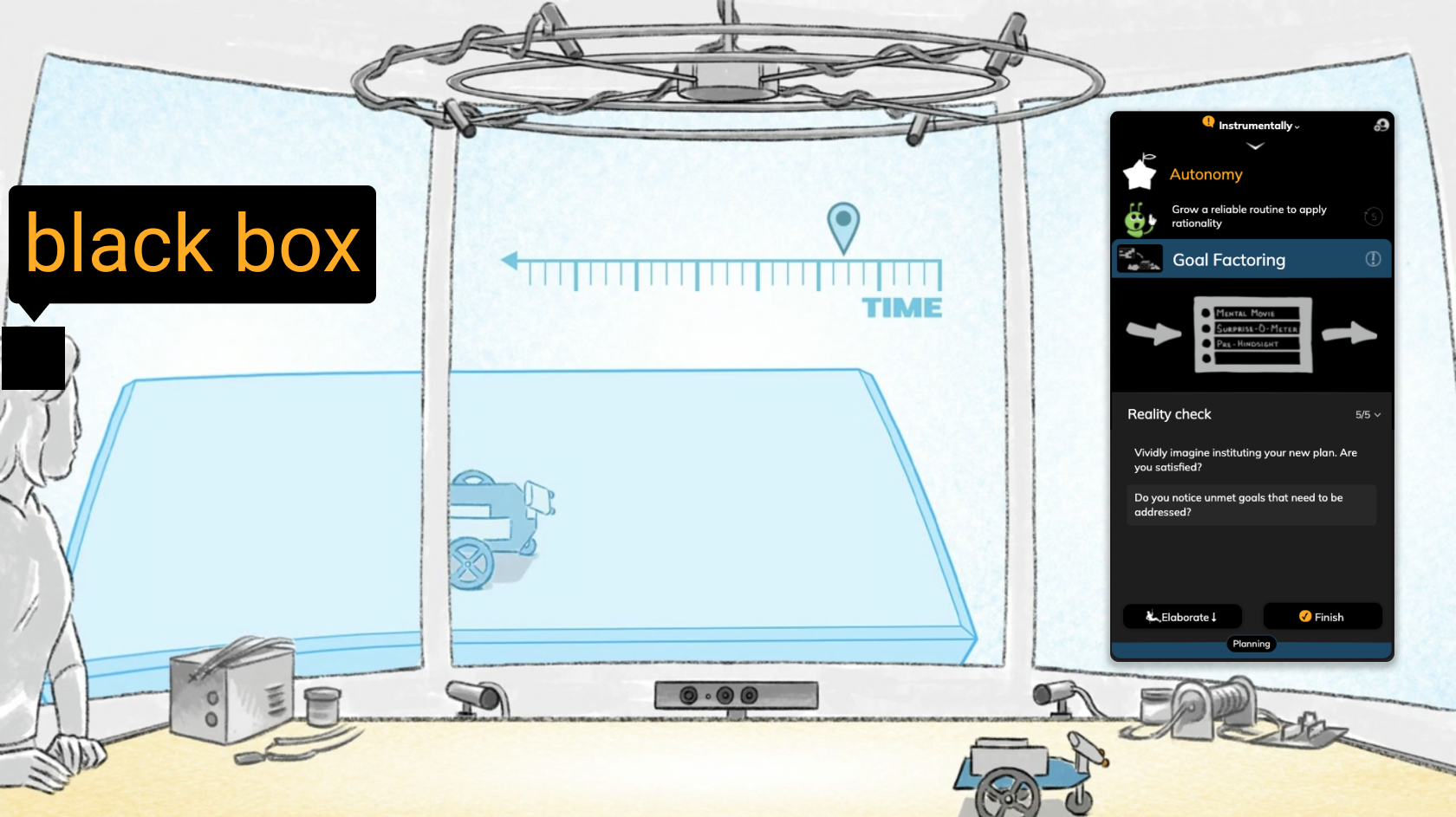

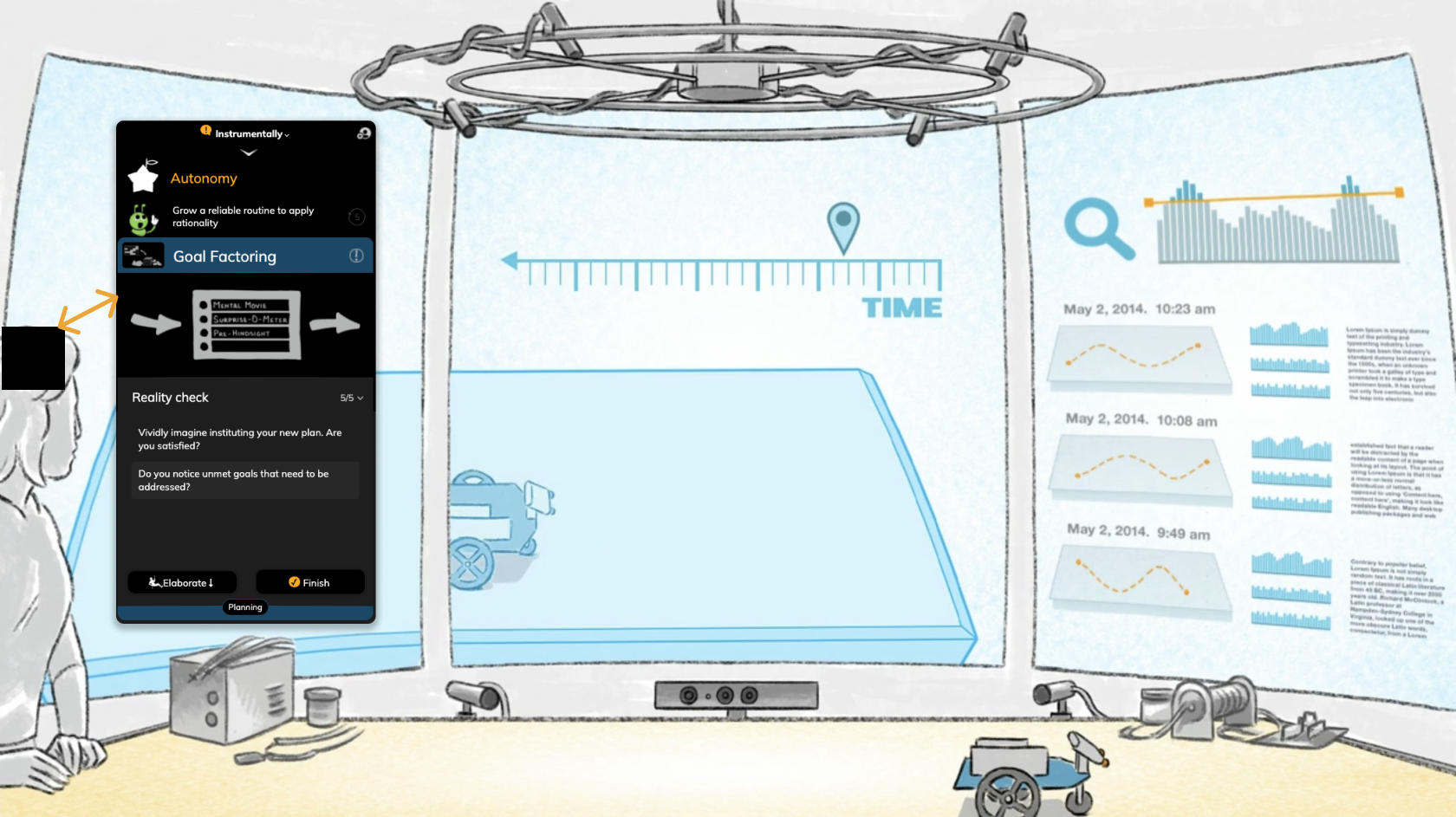

Seeing spaces

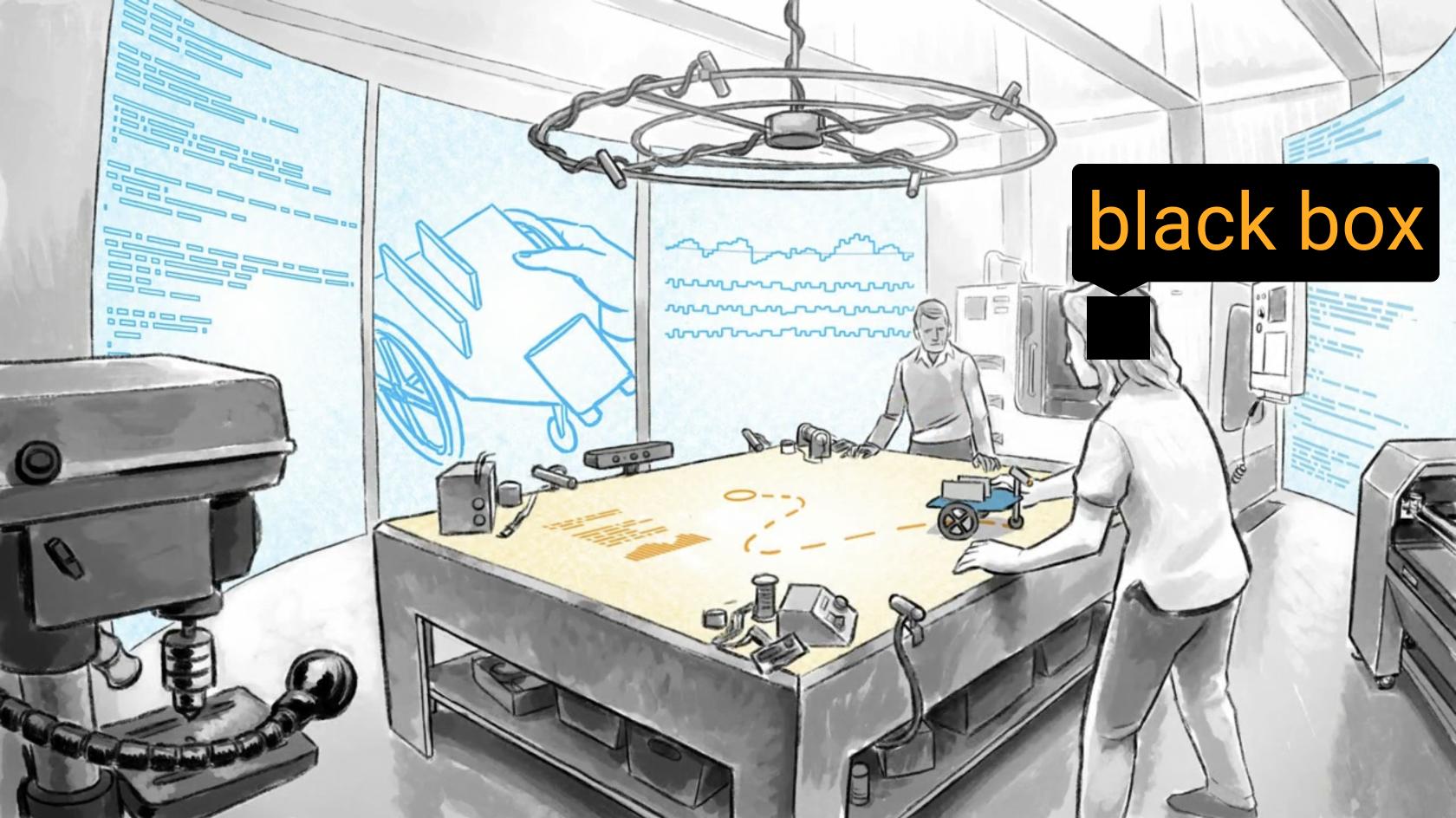

All the following images are borrowed from Bret Victor's excellent talk on Seeing Spaces.

David Hellman creates such enjoyable yet clear art and I thought that rather than making my own images it would be clearer to build upon this great work by adding some

annotations

on top of it.

These psychological systems affect the effectiveness of the modeling.

We don't just need

Seeing Spaces

to see systems we're modeling, we need to see the mental systems we're using in our process of modeling, we need interfaces for

Seeing Sense Stridings

.

Sense

as in mind, wisdom, judgment, understanding, intelligence, awareness, perceptiveness, intuition.

Stridings

as in regular pace/rate of progress/development, doing something difficult/effortful.

Hit one's stride: To achieve a steady, effective pace. To attain a maximum level of competence.

Take in stride: To cope with calmly, without interrupting one's normal routine.

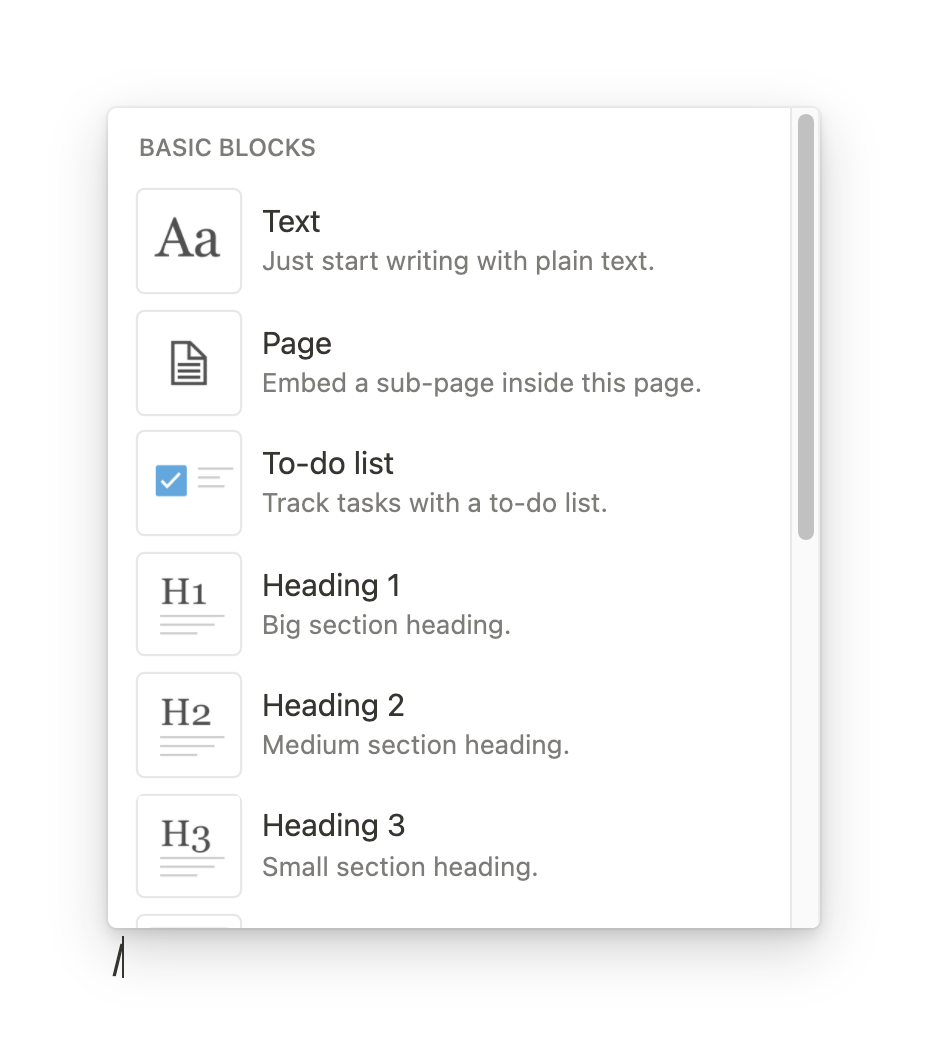

Notion Blocks

The idea of blocks in Notion makes capabilities visible, you just press "/" and get to see a list of possible parts and operations.

Imagine this but for launching dynamic models relevant to your rationality informed methods.
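
A slash-style palette for launching dynamic models could be sketched as a simple filter over a registry. This is a hypothetical illustration in Python: the `palette_matches` function and the idea of a registry that maps a model name to a short description are assumptions, not part of Notion or of the prototype.

```python
def palette_matches(query: str, entries: dict[str, str]) -> list[str]:
    """Return names of entries matching a slash query such as "/goal", alphabetically.

    `entries` is a hypothetical registry mapping a model name to a short description.
    A bare "/" lists everything, which is what makes the capabilities visible.
    """
    if not query.startswith("/"):
        return []
    needle = query[1:].strip().lower()
    return sorted(name for name in entries if needle in name.lower())
```

Typing just "/" returns every registered model, so the user can find a model by recognition and does not need to recall its name first.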

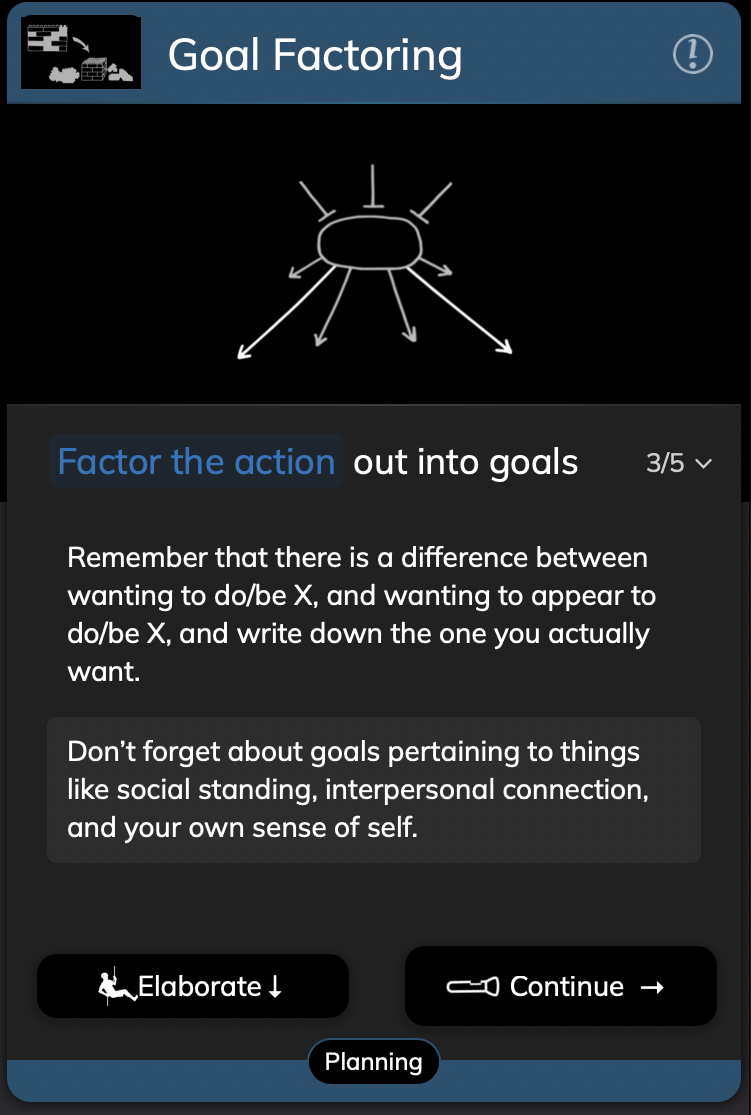

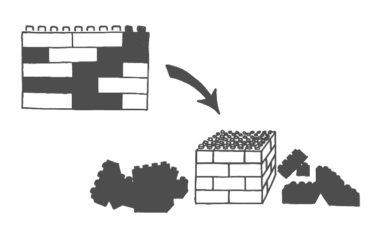

Goal Factoring

The concept and the image are from the CFAR Handbook.

A common metaphor in the CFAR Handbook is lego parts. Break up concepts into smaller parts to understand the gears of a system.

Inside out

Yes, the movie Inside Out isn't accurate in all of its metaphors.

The metaphor of the mental control room is fairly close to Bret Victor's

seeing spaces

.

We can't yet put sensors on our brains that generate accurate data on these kinds of systems. But consider a seeing sense stridings space where users model their mental systems via incremental communication with their black box brain, through experiments, focusing, etc.

Build systems that show mental systems models

Show affordances of

available actions

to update mental system parameters with.

Let users explore and update the parameters.

With a combination of directed and undirected play.

Design could help provide robust yet accessible conceptual tools for users to model their short and long-term needs with.

In his talk

"Seeing Spaces"

Bret Victor also suggests three important aspects of seeing:

being able to see inside a system, see across time of a system and see across possibilities of systems.

Being able to see the process of cognitive algorithms used, the methods, we'd get feedback to deeply understand these systems and then more easily update them for the better.

We want the information that led to an action, not just the action

.

The function, not just the output

.

Showing process as part of the proof that our process works effectively.

With risk of sounding like a broken record:

"The wrong way to understand a system is to talk about it, to describe it, the right way to understand it is to get in there, model it and explore it, you can't do that in words. So what we have is that people are using these very old tools, people are explaining and convincing through reasoning and rhetoric, instead of the newer tools of evidence and explorable models."

Not just evidence and explorable models of specific work, but of people and their judgment.

Forecasting lets you do this a little bit, forcing people to be more accurate with their beliefs.

But we need evidence for process too, and the current best way to get this is to show screencasts and save replays.

LessWrong positions

world modeling

as a central category, next to e.g. world optimization.

Part of world modeling is modeling our own mental systems. The way we do this today varies, some people might use powerful tools to think with, others mostly keep it inside their head. Process information is mostly hidden and unclear, a black box.

When we communicate these models to each other we use mostly textual representations, a lossy compression of the rich mental models we have in our heads.

Models about rationality process could be made visible and more practical to use to clearly communicate, but also to help us with other rationality verbs such as e.g.

aligning motivation to truth

,

supporting each other

and redesigning ourselves with conceptual tools for self-directed

behavior change

.

Assign a part of your environment for your

rationality sidebar, self-monitor

.

It doesn't have sensors for your mental systems, but it shows you affordances of methods to update/transform your psychological systems into better balanced states, where they can more effectively help you get what you need in this situation.

Self-monitoring is considered an important skill in the aspiring Effective Altruism community,

yet even though monitor is in the name, we tend to try to simulate this using only our minds, not enhance the process with seeing tools like visual monitors that can help us model, show and test the psychological process systems we're trying to monitor against.

To effectively improve our judgment

while

building/modeling/updating systems, we need some meta tools to understand our process, our methods, our current aspirations, our short-term needs (like e.g. equanimity, autonomy, fairness, relatedness) to help us find balance, gain agency and make better decisions.

Attention is limited; it is inefficient to try to self-monitor and think about a complex modeling challenge at the same time.

This is one reason why collaboration is useful, the pool of attention grows, allowing for more feedback.

Offloading some of the information out of our heads, into media helps too. Being able to quickly rewind time, to see feedback and evaluate your performance so you can adapt is very valuable for

deliberate practice

.

A visual monitor assigned to be a "self-monitor" to help you do self-monitoring of your psychological systems, to see how their processes flow.

Of course this wouldn't be an app like this in a seeing space, but something more integrated.

In the short to medium-term an app-version for a desktop computer is more feasible, but

the key point is that a dynamic interface for rationality systems could be a visible part of your environment.

“Search ... find sessions in the past that meet that condition, and you just go in there and figure out what was going on.”

, but for data on your systems for practicing rationality. E.g. when did I work on a similar problem in the past? What rationality informed methods worked when I was really low on equanimity? And so on...
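
A query like the ones above could be sketched as a filter over logged sessions. This is a hypothetical illustration in Python: the `Session` fields, the 1 to 5 equanimity scale and the `helped` flag are assumptions for the sake of the example, not data the prototype collects.

```python
from dataclasses import dataclass


@dataclass
class Session:
    """Hypothetical log entry for one practice or work session."""
    problem: str
    method: str
    equanimity: int  # self-rated, 1 (very low) to 5 (high); assumed scale
    helped: bool     # did the method seem to help in this session?


def methods_that_helped(sessions: list[Session], max_equanimity: int) -> dict[str, int]:
    """Count, per method, the sessions at or below `max_equanimity` in which it helped."""
    counts: dict[str, int] = {}
    for s in sessions:
        if s.equanimity <= max_equanimity and s.helped:
            counts[s.method] = counts.get(s.method, 0) + 1
    return counts
```

Calling `methods_that_helped(sessions, max_equanimity=2)` would answer the question "what worked when I was really low on equanimity?" with a count per method, which a richer interface could then link back to the recorded sessions themselves.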

Collaboration

Show your process and your needs, so collaborators can understand and support you.

In a learner's early developmental stages they're advised to have a mentor with them to guide their deliberate practice.

But over time it becomes the learner's own responsibility to be able to give themselves feedback to continue to grow independently.

This makes effective learning more scalable, as you don't need as much costly coordination to create effective learning environments.

It isn't a replacement of the mentor/teacher/coach, but an aid for your hone-work.

Being able to see inside, see across time and see across possibilities of these meta systems of psychology and rationality would be helpful to improve these potential capabilities.

Seeing across time and possibilities is kind of what developmental psychology is about.

Stereotypes come from not being able to see properly.

It's not that we're bad people for stereotyping, it's just hard to perceive and appreciate "invisible" traits.

Part of a good process for cooperation is to have a growth mindset for yourself but also for other people.

With the systems I'm describing we could more clearly see possibilities of what other people could grow into, even if they're not signaling a sufficient skill level right now. We could see and value their continuous process of improving.

We could consider possible causes of their behavior and consider rationally compassionate ones more easily.

With better systems for modeling rationality relevant models, we could more quickly model what we need based on the best available theory, and request support to receive what we need from others.

We could see other people's process across time, see their efforts to improve, support them on the metrics they currently care about.

Dynamic models of epistemics/conceptual tools as packages to import and use in modeling.

Chaim Gingold research

One of the design principles distilled.

Advancing the field of Applied Rationality Interfaces

Applied rationality is still a young field, and a focus on the subset of systems for reliably applied rationality routines is even younger. No textbook has been written on the topics yet.

"What about when you're working in a domain where the textbooks haven't been written yet?

That's when we start moving" ... "towards the more

scientific way of thinking, where you're discovering and codifying those underlying principles

, or you're generating that theory that engineering relies on.

This is incredibly powerful because

this is how we explore and

map out new territory

, this is how you build new kinds of things and

understand them deeply enough to build

them

reliably and robustly

."

This project is among early steps for building theory on how to build reliable applied rationality routine interfaces.

The theory is improved partially by making prototypes, but the potential products could be a lot better in the future as we figure out how to fit the design to more virtues and theory.

To build reliable and robust systems for reliable rationality routines that grow and maintain minds with good judgment, we need to understand the systems deeply by being able to see what we're doing.


Potential Utility

There are a lot of opportunities to improve the world, but if we want to have the most impact we need to explore to calibrate our judgment for high expected value projects, prioritize and then align our motivations to truth and pursue those projects.

These are some cause areas of high priority

.

This project aspires to advance our knowledge on effective ways to design instruments for rationality methods to, in the short- and long-term, empower people in general but with focus on people working on the most important cause areas mentioned above.

Behavior empowerment projects like this are not ends in themselves, but become important as they could be effectiveness multipliers for work on

important causes

.

Community judgments pointing in a similar direction

As a proxy to compactly communicate robustness / good judgment

, here are some more authoritative community judgment recommendations that I consider this research project to be significantly related to.

Among the top cause areas (as of writing this)

Building capacity to explore and solve problems

Improving individual reasoning or cognition

Building Effective Altruism

Improving institutional decision-making

Direction of good judgment aspiring Effective Altruists

Advancing Wisdom / Intelligence, by Ozzie Gooen

"Rationality-related research, marketing, community"

"Tools for Thought"

, including collaboration.

"Education innovations"

I think this research article could potentially inform prioritization research on advancing wisdom.

Behavioral design capacity is critical but less discussed in the Effective Altruism movement, according to Spencer Greenberg.

Ten Conditions for Change

As agency to apply knowledge approaches 0, knowledge isn't worth much.

Improved design could potentially make agency to apply knowledge significantly higher for more people.

Lightcone Infrastructure

: "This century is critical for humanity. We build tech, infrastructure, and community to navigate it."

Experts in Interface Design

Ink & Switch

Muse team

Future of Coding

About Face

and more...

Traction of complementary projects

There are already a few projects working on instrument and infrastructure gaps similar to the ones I've described above:

Clearer Thinking

CFAR

LessWrong

Effective Self-Help

Training for Good

I am seriously uncomfortable disagreeing / giving unsolicited feedback to these beloved organizations.

It is my intent to try hard to be a principled dissenter.

There is a lot of uncertainty in this still nacant design space and I do not claim to have found proven better designs.

Still, from my scholarshipping I've at least gotten a hunch of possibly tractable directions of building more effective instruments and infrastructure that I want to share and discuss.

Since these organizations lack public openness and have few slots to join, I've done independent research using my own savings. In science there is seldom just one university with a research team on a topic, so for diversity I thought I'd try to make my own

inside view

for applied rationality interfaces.

Steven Pinker recently said

that rationality, as he defines it, would benefit everyone, but that it's just not broadly seen as 'cool' yet.

I know from some Effective Altruism / LessWrong reviews of Pinker's recent books that the community doesn't always agree with Pinker, but I've yet to find good reasons not to make rationality more accessible, at least to most aspiring Effective Altruists (or adjacent) who are not yet convinced of the benefits of practicing rationality.

Your feedback is welcome, so we can improve:

Long-term Perspective

Many of the causes mentioned above are important both short and long-term.

Work on high-quality, rationality-informed instrument designs might not be ready for a while, but it might greatly improve our long-term expertise growth and could therefore be important long-term.

Eventually,

AI might outperform us

on this kind of expertise anyway. However, if we made better tools sooner which could empower people to more reliably work on e.g. creating safe AI, then that upgrade might be worth it to increase the odds of

AI Safety

, for example.

Have you scouted any key evidence for or against the project which is missing here?

Your feedback is welcome, so we can improve:

Theories of Change

Theory of Change

Definition

:

"A comprehensive description and illustration of how and why a desired change is expected to happen in a particular context."

This is sometimes used in the Effective Altruism community to improve accuracy and clarity.

There are many sub-factors of the top level values and cause areas to make progress on.

For the causes referred to above, one such sub-factor is expertise and capability, which has instrumental value: it more effectively creates capacity within the community so we can manage the tough challenges the cause areas present to us.

Here I define expertise approximately as a combination of domain knowledge in an important cause area and embodiment of rationality process skills.

More concrete paths to improve on these still big-picture properties are discussed in the Prototype Project section.

This section is still early, need to write out fair

More

reliably

applied capability

People more reliably notice important patterns and more often actually apply good methods at those opportunities.

Motivation without self-deception is more likely, because people

TODO

More people

growing and maintaining cutting edge capability while working on

the more important causes

Brainstorm, need to distil

Improve your life with rationality, so you have more resources left to improve the world

Meaning is part of well-being, so once people learn this, they want to grow skill to contribute

Less arbitrary limiting of ourselves with false beliefs, easier paths to mastery

Effective Learning

Aligning motivation

People are able to update their personal fit to a larger extent even if it is really hard, since they have more powerful methods to cope and update themselves.

Shortcuts (goal factoring) toward good judgement, not just learn effectively, learn the right things.

Instruments empower us to have higher expertise, easier to become expert

Effective coping to calibrate aversions, so you can use more effective paths in spite of uncertainty

Scalable Support, true but rational compassionate perspectives to remain strongly motivated without self-deception

Availability, not just people already skilled, credentials etc, but anyone can learn and practice the skills. Post-scarcity

Personalized learning for novices <-> experts

Curse of knowledge

Scarcity of top performers -> Scarcity of people to update norms and give feedback to aspiring top performers.

We've found important causes, we need to effectively help people update themselves to work on the important causes.

Catch attention and hold attention: Rewards -> Social/stories -> Self-Improvement -> World-improvement

Scout mindset to update motivation (area under the curve), toward higher expected utility projects like 80k hours top areas.

Rationality skills make it more effective to learn domain specific skills and become domain expert

Risk: Coordination problem between all experts, but that's not the bottleneck right now

Improved capabilities

to improve with

Push boundaries of expertise at the cutting edge of Deliberate Practice

Improved Instruments for Improving that enhance human capability further

Community of designers that update instruments for new best practices

By being explicit about methods, making designs, sharing with growcasts,

we make the community accountable for actually improving.

More concrete feedback whether methods work, so we can learn and update.

Better science (understand systems in the world) and better engineering (build systems in the world to create more value)

(

Media for Thinking the Unthinkable

,

Seeing Spaces

)

Processes important for creativity, insubordination

Media for Thinking the Unthinkable

Growcasts to be accountable, proof of process. Get feedback, but also support through challenge. Share process for others to learn from.

Artificial Intelligence: create and collaborate with AI to solve problems.

One key way to improve expertise is to collaborate...

Improved cooperation

For better judgments we need to understand systems related to judgment.

To effectively understand systems we need explorable dynamic models and modeling environments.

Effective Communication of psychological systems

Effective Communication of cooperation systems

Better individuals, better at scouting together

Methods/Processes you can see, evaluate, optimize for your needs. Agency and control.

Visible values of individuals, so we can optimize for a merged value function

Trust by being open/transparent/honest/vulnerable.

Opening the Door

Scope

<––––––––––––––––––––––––––––––––––––––>

Concrete

Abstract

Summary and Conclusion

Acknowledgements

How you can contribute

Summary and Conclusion

This section is still early, need to write out fair

Acknowledgements

Feedback

Inspirations

Advisors over the course of the project:

Simon Koser

Advisors specifically for this article:

Simon Koser

,

Serge Solkatt

Support

Support over the course of the project:

TODO: link to website

Support specifically for this article:

Simon Koser, Simon Holm, Siri Helle, Siri Brolén, Erik Engelhardt, Henri Thunberg, Madeleine, Jonathan Salter, Thomas Ahlström

How you can contribute

Your feedback is welcome, so we can improve:

Any feedback you have is appreciated. If you're unsure what feedback I want or how to give it, I suggest reading this:

How to support well in research conversations

Here are some contribution idea prompts:

• Provide recommendations

. Do you know of better articles about the same topic, or related articles that could enrich the robustness of this article?

• Suggest or refer me to people who might think continuing this research would be valuable.

It is stressful to do independent research without any income security. Odds are that the research would progress faster if I had more security.

• If you think you or somebody you know could fit as a co-founder, please contact me.

My strength is in research and design, not in managing companies.

• Can you goal-factor (a CFAR technique) the design presented in this article and find a superior alternative?

• Using knowledge from cognitive science, rationality, human-computer interaction and other sciences, model better design alternatives that score even higher on the presented virtues for reliable rationality routines (and potentially other important virtues not presented). Build and share with the communities LessWrong and Effective Altruism!

• Want to design or build an Instrument for rationality methods?

See this

list of potentially promising Instruments for rationality methods to build

.

• Do you think there is a feasible way that AI could help with the obstacles of reliable rationality routines?

•

Study Bret Victor's research

carefully and estimate how important you think it could be and share the results on LessWrong or Effective Altruism Forum.

• Distill and communicate the most important information of this article better than I can.

• Help me improve this communication model however you think is best, or use e.g. these feedback prompts:

Can you think of any strong evidence for or against specific claims?

Describe your cruxes with the model

How likely would you be to recommend this to a friend if the vision above came true?

Rate the article based on the

RAIN Framework

scoring and suggest possible ways to improve the article on the factors. Any certain parts that bring down the average a lot?

Make your own attempt at making a communication model with better RAIN Framework scores of what you consider the main points of this article

Hi dear ally,

Into the Depths

"'It’s more complicated than that.' No kidding. You could nail a list of caveats to any sentence in this essay. But the complexity of these problems is no excuse for inaction. It’s an invitation to read more, learn more, come to understand the situation, figure out what you can build, and go build it. That’s why this essay has 400 hyperlinks. It’s meant as a jumping-off point. Jump off it."

If you want to scout further, here are a few paths:

I'd love to hear from you! Contact:

joar.gr@gmail.com

Work by people I mostly admire

Inspirations

, including many role models and fields of research relevant for further reliable rationality routine research.

Check out the links in the article above.

More of my work

Website for the Instrumentally project

Prototype

Digital Garden Research